The new Opteron 6300: Finally Tested!

by Johan De Gelas on February 20, 2013 12:03 AM EST

Java Server Performance

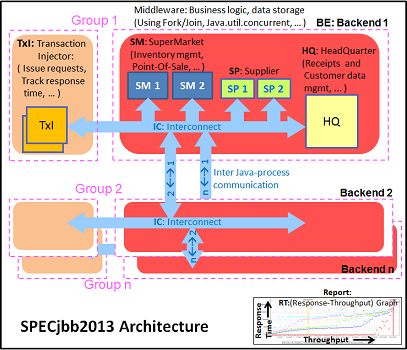

The SPECjbb®2013 benchmark is based on a "usage model based on a world-wide supermarket company with an IT infrastructure that handles a mix of point-of-sale requests, online purchases and data-mining operations". It uses the latest Java 7 features and makes use of XML, compressed communication, and messaging with security.

We tested with four groups of transaction injectors and backends. We applied relatively basic tuning to mimic real-world use.

"-server -Xmx4G -Xms4G -Xmn1G -XX:+AggressiveOpts -XX:+UseLargePages"

With these settings, the benchmark takes about 40GB of RAM.
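
As a rough sanity check on that figure, the sketch below adds up the configured heap. It assumes, which the article does not state, that each of the four groups runs one transaction injector JVM and one backend JVM, and that the 4 GB heap flags apply to every one of them. The controller JVM and all non-heap overhead are lumped into the remainder.

```python
# Back-of-the-envelope heap budget for the multi-JVM run.
GROUPS = 4               # from the article: four groups
JVMS_PER_GROUP = 2       # ASSUMPTION: one injector JVM + one backend JVM per group
HEAP_GB_PER_JVM = 4      # from -Xmx4G/-Xms4G; ASSUMPTION: same flags on every JVM
REPORTED_TOTAL_GB = 40   # from the article: "about 40GB of RAM"


def heap_budget_gb(groups, jvms_per_group, heap_gb_per_jvm):
    """Total Java heap reserved across all group JVMs, in GB."""
    return groups * jvms_per_group * heap_gb_per_jvm


heap_gb = heap_budget_gb(GROUPS, JVMS_PER_GROUP, HEAP_GB_PER_JVM)
remainder_gb = REPORTED_TOTAL_GB - heap_gb  # controller, non-heap memory, OS, etc.

print(f"Heap across group JVMs: {heap_gb} GB")
print(f"Left over for everything else: about {remainder_gb} GB")
```

Under those assumptions the heap alone accounts for most of the reported footprint. A different JVM layout would change the split.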

Since SPECjbb®2013 is very new, we will research the benchmark in more detail later. The first results are very interesting, though. Notice how one Opteron 6380 edges out the Xeon 2660. Once we double the number of CPUs, the Xeon outperforms the best Opteron by 17%. The fact that each Opteron processor is a dual NUMA node is not helping the Opteron. It is clear that the single die or "native octal-core" approach scales better here (for now).

SPECjbb®2013 is a registered trademark of the Standard Performance Evaluation Corporation (SPEC).

55 Comments

coder543 - Wednesday, February 20, 2013 - link

You realize that we have no trouble recognizing that you've posted about fifty comments that are essentially incompetent racism against AMD, right?

AMD's processors aren't perfect, but neither are Intel's. And also, AMD, much to your dismay, never announced they were planning to get out of the x86 server market. They'll be joining the ARM server market, but not exclusively. I'm honestly just ready for x86 as a whole to be gone, completely and utterly. It's a horrible CPU architecture, but so much money has been poured into it that it has good performance for now.

Duwelon - Thursday, February 21, 2013 - link

x86 is fine, just fine.

coder543 - Wednesday, February 20, 2013 - link

totes, ain't nobody got time for AMD. they is teh failzor.

(yeah, that's what I heard when I read your highly misinformed argument.)

quiksilvr - Wednesday, February 20, 2013 - link

Obvious trolling aside, looking at the numbers, it's pretty grim. Keep in mind that these are SERVER CPUs. Not only is Intel doing the job faster, it's using less energy, and paying a mere $100-$300 more per CPU to cut off on average 20 watts is a no-brainer. These are expected to run 24 hours a day, 7 days a week with no stopping. That power adds up, and if AMD has any chance to make any dent in the high-end enterprise datacenters they need to push even more.

Beenthere - Wednesday, February 20, 2013 - link

You must be kidding. TCO is what enterprise looks at and $100-$300 more per CPU in addition to the increased cost of Intel based hardware is precisely why AMD is recovering server market share.

If you do the math you'll find that most servers get upgraded long before the difference in power consumption between an Intel and AMD CPU would pay for itself. The total wattage per CPU is not the actual wattage used under normal operations and AMD has as good or better power saving options in their FX based CPUs as Intel has in IB. The bottom line is those who write the checks are buying AMD again and that's what really counts, in spite of the trolling.

Rory Read has actually done a decent job so far even though it's not over and it has been painful, especially to see some talented and loyal AMD engineers and execs part ways with the company. This happens in most large company reorganizations and it's unfortunate but unavoidable. Those remaining at AMD seem up for the challenge and some of the fruits of their labor are starting to show with the Jaguar cores. When the Steamroller cores debut later this year, AMD will take another step forward in servers and desktops.

Cotita - Wednesday, February 20, 2013 - link

Most servers have a long life. You'll probably upgrade memory and storage, but CPU is rarely upgraded.

Guspaz - Wednesday, February 20, 2013 - link

Let's assume $0.10 per kilowatt hour. A $100 price difference at 20W would take 1000 kWh, which would take 50,000 hours to produce. The price difference would pay for itself (at $100) in about 6 years.

So yes, the power savings aren't really enough to justify the cost increase. The higher IPC on the Intel chips, however, might.
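
The payback estimate above can be reproduced with a short calculation. This is a minimal sketch using only the figures quoted in the thread ($0.10 per kWh, a 20 W difference, a $100 to $300 price premium). The function name and the assumption of continuous 24/7 operation at the full 20 W difference are illustrative, not something the commenters specified.

```python
HOURS_PER_YEAR = 24 * 365  # ASSUMPTION: the server runs around the clock


def payback_years(price_premium_usd, watts_saved, usd_per_kwh):
    """Years of continuous operation before power savings equal the price premium."""
    kwh_needed = price_premium_usd / usd_per_kwh   # energy worth the premium
    hours_needed = kwh_needed / (watts_saved / 1000)  # time to save that much energy
    return hours_needed / HOURS_PER_YEAR


for premium in (100, 300):
    years = payback_years(premium, watts_saved=20, usd_per_kwh=0.10)
    print(f"${premium} premium: about {years:.1f} years to break even")
```

At the $100 end this comes to roughly 5.7 years, in line with the "about 6 years" figure. It ignores cooling overhead and regional power prices.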

bsd228 - Wednesday, February 20, 2013 - link

You're only getting part of the equation here. That extra 20 W of power consumed mostly turns into heat, which now must be cooled (requiring more power and more AC infrastructure). Each rack can have over 20 2U servers with two processors each, which means nearly an extra kilowatt per rack, and the corresponding extra heat.

Also, power costs can vary considerably. I was at a company paying 16-17 cents in Oakland, CA. 11 cents in Sacramento, but only 2 cents in Central Washington (hydropower).
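
The per-rack figure and the effect of regional power prices can be sketched the same way. The server count, CPU count, wattage, and rates come from the comment above (0.16 is the low end of the quoted Oakland range). The `cooling_overhead` parameter is a hypothetical knob for the extra cooling power the comment mentions; no value for it is given in the thread, so it defaults to zero.

```python
HOURS_PER_YEAR = 24 * 365  # ASSUMPTION: continuous operation


def extra_rack_watts(servers_per_rack, cpus_per_server, extra_watts_per_cpu):
    """Additional power draw per rack from the per-CPU difference."""
    return servers_per_rack * cpus_per_server * extra_watts_per_cpu


def annual_cost_usd(watts, usd_per_kwh, cooling_overhead=0.0):
    """Yearly cost of a constant load; cooling_overhead is a hypothetical multiplier."""
    kwh_per_year = watts / 1000 * HOURS_PER_YEAR * (1 + cooling_overhead)
    return kwh_per_year * usd_per_kwh


rack_watts = extra_rack_watts(servers_per_rack=20, cpus_per_server=2,
                              extra_watts_per_cpu=20)
print(f"Extra draw per rack: {rack_watts} W")

rates = {"Oakland, CA": 0.16, "Sacramento, CA": 0.11, "Central Washington": 0.02}
for site, rate in rates.items():
    print(f"{site}: ${annual_cost_usd(rack_watts, rate):,.0f} per rack per year")
```

With 20 dual-socket servers the extra draw is 800 W, matching the "nearly an extra kilowatt" estimate. The yearly cost then scales directly with the local rate.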

JonnyDough - Wednesday, February 20, 2013 - link

+as many as I could give. Best post!

Tams80 - Wednesday, February 20, 2013 - link

I wouldn't even ask the NYSE for the time of day.