The Apple iPad Pro Review

by Ryan Smith, Joshua Ho & Brandon Chester on January 22, 2016 8:10 AM EST

SoC Analysis: On x86 vs ARMv8

Before we get to the benchmarks, I want to spend a bit of time talking about the impact of CPU architectures at a moderate level of technical depth. At a high level, there are a number of peripheral issues when it comes to comparing these two SoCs, such as the quality of their fixed-function blocks. But when you look at what consumes the vast majority of the power, it turns out that the CPU is competing with things like the modem/RF front-end and GPU.

x86-64 ISA registers

Probably the easiest place to start when we’re comparing things like Skylake and Twister is the ISA (instruction set architecture). This subject alone is probably worthy of an article, but the short version for those that aren't really familiar with this topic is that an ISA defines how a processor should behave in response to certain instructions, and how these instructions should be encoded. For example, if you were to add two integers together in the EAX and EDX registers, x86-32 dictates that this would be encoded as 01 D0 in hexadecimal. In response to this instruction, the CPU would add whatever value was in the EDX register to the value in the EAX register and leave the result in the EAX register.
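To make the encoding example concrete, here is a minimal sketch in Python that pulls apart the two bytes 01 D0. It assumes 32-bit operand size with no prefixes and handles only the register-to-register form of opcode 0x01; the function name `decode_add_rm32_r32` is made up for illustration and is not part of any real disassembler.

```python
# Register numbering used by the 3-bit fields of the x86 ModRM byte (32-bit names).
REG32 = ["eax", "ecx", "edx", "ebx", "esp", "ebp", "esi", "edi"]


def decode_add_rm32_r32(code: bytes) -> str:
    """Decode the two-byte register-to-register form of ADD (opcode 0x01).

    Hypothetical helper for illustration: it ignores prefixes, memory
    operands, and every other opcode.
    """
    opcode, modrm = code[0], code[1]
    if opcode != 0x01:
        raise ValueError("only opcode 0x01 (ADD r/m32, r32) is handled")
    mod = modrm >> 6             # top two bits: addressing mode
    reg = (modrm >> 3) & 0b111   # middle three bits: source register
    rm = modrm & 0b111           # low three bits: destination register
    if mod != 0b11:
        raise ValueError("only register-direct operands (mod == 3) are handled")
    # Intel syntax lists the destination first.
    return f"add {REG32[rm]}, {REG32[reg]}"


print(decode_add_rm32_r32(bytes.fromhex("01d0")))  # add eax, edx
```

The second byte, 0xD0, is 11 010 000 in binary: register-direct mode, EDX as the source, and EAX as the destination that receives the sum.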

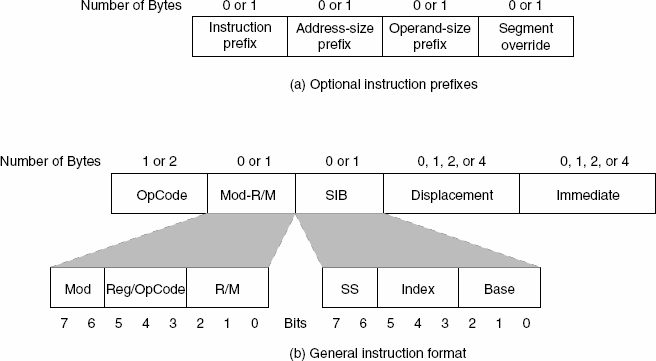

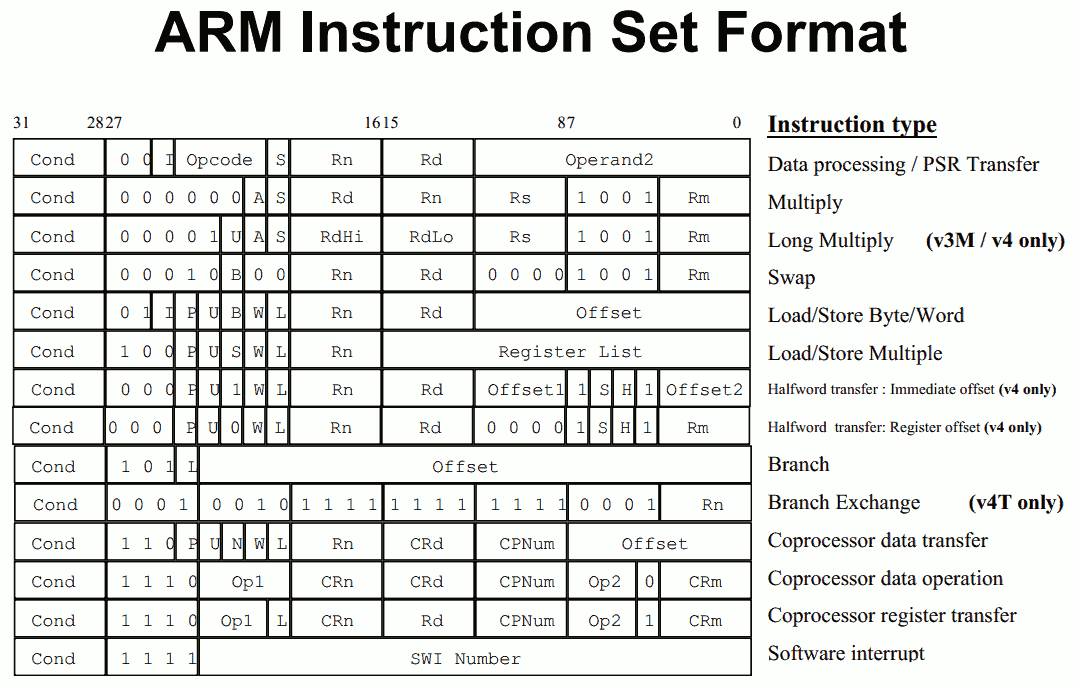

The fundamental difference between x86 and ARM is that x86 is a relatively complex ISA, while ARM is relatively simple by comparison. One key difference is that ARM dictates that every instruction is a fixed number of bits. In the case of ARMv8-A and ARMv7-A, all instructions are 32 bits long unless you're in Thumb mode, in which case instructions are 16 bits long, but the same sort of trade-offs that come from a fixed-length instruction encoding still apply. Thumb-2 mixes 16-bit and 32-bit instructions, which makes it a variable-length encoding, so in some sense the trade-offs of a variable-length ISA apply there instead. It’s important to make a distinction between instruction and data here, because even though AArch64 uses 32-bit instructions the register width is 64 bits, which is what determines things like how much memory can be addressed and the range of values that a single register can hold. By comparison, Intel’s x86 ISA has variable-length instructions. In both x86-32 and x86-64/AMD64, each instruction can range anywhere from 8 to 120 bits long depending upon how the instruction is encoded.

At this point, it might be evident that on the implementation side of things, a decoder for x86 instructions is going to be more complex. For a CPU implementing the ARM ISA, because the instructions are of a fixed length the decoder simply reads instructions 2 or 4 bytes at a time. On the other hand, a CPU implementing the x86 ISA would have to determine how many bytes to pull in at a time for an instruction based upon the preceding bytes.
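The difference in decoder work can be sketched with a toy model. The variable-length format below is an assumption made purely for illustration (the first byte of each instruction stores its total length); real x86 length decoding depends on prefixes, opcodes, ModRM, and more, and is considerably harder.

```python
def fixed_boundaries(code: bytes, width: int = 4) -> list[int]:
    """Fixed-width ISA: every start offset is known without inspecting the bytes."""
    return list(range(0, len(code), width))


def variable_boundaries(code: bytes) -> list[int]:
    """Toy variable-length ISA (NOT x86): byte 0 of each instruction is its length.

    Each start offset depends on the contents of the previous instruction,
    so the boundaries have to be discovered one after another.
    """
    offsets = []
    pos = 0
    while pos < len(code):
        length = code[pos]
        if length == 0 or pos + length > len(code):
            raise ValueError(f"bad length byte at offset {pos}")
        offsets.append(pos)
        pos += length
    return offsets


fixed_stream = bytes(16)                                   # four 4-byte instructions
toy_stream = bytes([2, 0xAA, 1, 3, 0xBB, 0xCC, 2, 0xDD])   # lengths 2, 1, 3, 2

print(fixed_boundaries(fixed_stream))   # [0, 4, 8, 12]
print(variable_boundaries(toy_stream))  # [0, 2, 3, 6]
```

In the fixed-width case all four start offsets could be computed in parallel, while the variable-length loop carries a dependency from each instruction to the next.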

A57 Front-End Decode, Note the lack of uop cache

While it might sound like the x86 ISA is just clearly at a disadvantage here, it’s important to avoid oversimplifying the problem. Although the decoder of an ARM CPU already knows how many bytes it needs to pull in at a time, this inherently means that unless all 2 or 4 bytes of the instruction are used, each instruction contains wasted bits. While it may not seem like a big deal to “waste” a byte here and there, this can actually become a significant bottleneck in how quickly instructions can get from the L1 instruction cache to the front-end instruction decoder of the CPU. The major issue here is that due to RC delay in the metal wire interconnects of a chip, increasing the size of an instruction cache inherently increases the number of cycles that it takes for an instruction to get from the L1 cache to the instruction decoder on the CPU. If a cache doesn’t have the instruction that you need, it could take hundreds of cycles for it to arrive from main memory.

Of course, there are other issues worth considering. For example, in the case of x86, the instructions themselves can be incredibly complex. One of the simplest examples is the add instruction, where either the source or the destination can be in memory, although both cannot be in memory at once. An example of this might be addq (%rax,%rbx,2), %rdx, which could take 5 CPU cycles to complete on something like Skylake. Of course, pipelining and other tricks can make the throughput of such instructions much higher, but that's another topic that can't be properly addressed within the scope of this article.
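As a rough illustration of how much work that one instruction bundles together, the sketch below models it in Python. The register file as a dict, the flat `bytearray` memory, and the function name `addq_mem_to_reg` are all assumptions for the example; it says nothing about cycle counts or how any real core schedules the work.

```python
import struct

MASK64 = 0xFFFFFFFFFFFFFFFF


def addq_mem_to_reg(regs: dict, memory: bytearray) -> None:
    """Model addq (%rax,%rbx,2), %rdx in AT&T syntax.

    Three distinct steps hide inside the single instruction:
    address generation, a 64-bit load, and the add itself.
    """
    address = regs["rax"] + regs["rbx"] * 2                # base + index * scale
    (value,) = struct.unpack_from("<Q", memory, address)   # little-endian 64-bit load
    regs["rdx"] = (regs["rdx"] + value) & MASK64           # add, wrapping at 64 bits


memory = bytearray(64)
struct.pack_into("<Q", memory, 24, 100)    # store the value 100 at address 24
regs = {"rax": 16, "rbx": 4, "rdx": 5}

addq_mem_to_reg(regs, memory)
print(regs["rdx"])  # 105
```

Here the effective address is 16 + 4 * 2 = 24, so the value 100 is loaded and added to the 5 already in RDX.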

By comparison, the ARM ISA has no direct equivalent to this instruction. Looking at our example of an add instruction, ARM would require a load instruction before the add instruction. This has two notable implications. The first is that this once again is an advantage for an x86 CPU in terms of instruction density because fewer bits are needed to express a single instruction. The second is that for a “pure” CISC CPU you now have a barrier for a number of performance and power optimizations as any instruction dependent upon the result from the current instruction wouldn’t be able to be pipelined or executed in parallel.

The final issue here is that x86 just has an enormous number of instructions that have to be supported due to backwards compatibility. Part of the reason why x86 became so dominant in the market was that code compiled for the original Intel 8086 would work with any future x86 CPU, but the original 8086 didn’t even have memory protection. As a result, all x86 CPUs made today still have to start in real mode and support the original 16-bit registers and instructions, in addition to 32-bit and 64-bit registers and instructions. Of course, running a program in 8086 mode is a non-trivial task, but even in the x86-64 ISA it isn't unusual to see instructions that are identical to the x86-32 equivalent. By comparison, ARMv8 is designed such that you can only switch between AArch64 code and ARMv7-style AArch32 code across exception boundaries, so in practice programs are only going to run one type of code or the other.

Back in the 1980s up to the 1990s, this became one of the major reasons why RISC was rapidly becoming dominant as CISC ISAs like x86 ended up creating CPUs that generally used more power and die area for the same performance. However, today ISA is basically irrelevant to the discussion due to a number of factors. The first is that beginning with the Intel Pentium Pro and AMD K5, x86 CPUs were really RISC CPU cores with microcode or some other logic to translate x86 CPU instructions to the internal RISC CPU instructions. The second is that decoding of these instructions has been increasingly optimized around only a few instructions that are commonly used by compilers, which makes the x86 ISA practically less complex than what the standard might suggest. The final change here has been that ARM and other RISC ISAs have gotten increasingly complex as well, as it became necessary to enable instructions that support floating point math, SIMD operations, CPU virtualization, and cryptography. As a result, the RISC/CISC distinction is mostly irrelevant when it comes to discussions of power efficiency and performance as microarchitecture is really the main factor at play now.
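The idea of translating a complex instruction into simpler internal operations can be sketched as follows. The tuple format, the micro-op names, and the `tmp0` temporary are invented for illustration; actual micro-op formats are proprietary and differ between designs.

```python
def crack(instruction: tuple[str, str, str]) -> list[tuple[str, str, str]]:
    """Split a toy (op, source, destination) instruction into micro-ops.

    An add with a memory source becomes a load into a hypothetical internal
    temporary followed by a register-to-register add, which is the same
    two-step sequence a load/store ISA would spell out explicitly.
    """
    op, src, dst = instruction
    if op == "add" and src.startswith("["):
        return [("load", src, "tmp0"), ("add", "tmp0", dst)]
    return [instruction]   # already simple enough: passes through unchanged


print(crack(("add", "[rax+rbx*2]", "rdx")))
# [('load', '[rax+rbx*2]', 'tmp0'), ('add', 'tmp0', 'rdx')]
print(crack(("add", "rax", "rdx")))
# [('add', 'rax', 'rdx')]
```

Once the instruction stream is in this form, the back end of the core no longer needs to care which ISA the original bytes came from.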

408 Comments

MathieuLF - Monday, January 25, 2016 - link

Obviously you don't actually work in a real office where they require lots of specialized software. What's the point of having one device to complement another? That's a waste of resources.

LostAlone - Tuesday, January 26, 2016 - link

Totally agree. For a device to really be useful on a professional level then it needs to be something that is useful all the time, not just when it suits it. If you already wanted a tablet for professional stuff to begin with (a pretty shaky assumption since pro users tend to be working in one place where proper keyboard and mouse are usable) then you need a tablet that can be the only device you need to work on. You need something that you can put in your bag and know that whenever you arrive somewhere you are going to have every single tool you need. And that is not the iPad Pro. It's not even close to replacing an existing laptop. It's certainly very sexy and shiny and the big screen makes it great for reading comics and watching videos on the train but it's not a work device. It's simply not. Even in the only field where it might have claim to being 'pro' (drawing) it's not. It's FAR worse than a proper Wacom tablet because the software is so hugely lacking.

It's an iPad dressed up like a grown up device laying in the shadow of actual professional grade devices like the Surface Pro. You get a Surface then you can use it 24/7 for work. You can buy a dock for it and use it as your primary computer. You can get every single piece of software on your desktop plus anything your employer needs and it's easy for your work sysadmins to include it in their network because it's just another Windows PC. Just dumb stuff like iPads refusing to print on networked printers (which happens ALL the time by the way) exclude it from consideration in a professional space. It's a great device for traditional tablet fare but it's not a pro device.

Constructor - Tuesday, January 26, 2016 - link

Your straw man scenarios are just that. In reality most users don't need every last exotic niche feature of any given dinosaur desktop software as a praeconditio sine qua non.

Which you also might be able to deduce from the fact that most of those features had not been present on these desktop applications either when "everybody" nevertheless used them professionally even so.

In real life the physical flexibility and mobility of an iPad will often trump exotic software features (most bread-and-butter stuff is very much supported on iOS anyway) where the circumstances simply call for it.

Actual professionals have always made the difference notably by finding pragmatic ways to make the best use of the actual tools at hand instead of just whining about theoretical scenarios from their parents' basements for sheer lack of competence and imagination.

kunalnanda - Wednesday, January 27, 2016 - link

Just saying, but most office workers use a LOT more than simply notetaking, email, wordprocessor and calendar.

Demigod79 - Friday, January 22, 2016 - link

Software companies only create crippled, lesser versions of their software because of the mobile interface. Products like the iPad are primarily touch-based devices so apps must be simplified for touch. Although the iPad supports keyboard input (and has for years) you cannot navigate around the OS using the keys, keyboard shortcuts are few and far between and it still has touch features like autocorrect (and of course the iPad does not support a trackpad or mouse so you must necessarily touch the screen). The iPad Pro does not change this at all so there's no reason why software companies should bring their full productivity suite to this device. By comparison, PC software developers can rely on users having a keyboard and mouse (and now touchscreens as well for laptops and hybrids) so they can create complex, full-featured software. This is the primary difference between mobile and desktop apps. Just like FPS games must be watered down and simplified to make them playable with a touchscreen, productivity apps must also be watered down to be usable. No amount of processing power will change this, and unless the iPad Pro supports additional input devices (at the very least a mouse or trackpad) it will remain largely a consumption device.

gistya - Sunday, January 24, 2016 - link

This is just wrong. There are many fully-fledged applications available on iPad. It comes with a decent office suite, plus Google's is free for it as well. I haven't touched MS Word in a couple of years, and when I do it seems like a step backwards in time to a former, crappier era of bloatware.

Sure, I still use Photoshop and Maya and Pro Tools on my Mac, but guess what? iPad has been part of my professional workflow for four years now, and I would not go back.

Ask yourself: is a secondary monitor a "professional tool"? Heck yes. What about a third or fourth? What if it fits in your hand, runs on batteries, and has its own OS? Now you cannot find a professional use?

Give me a break. People who are not closed-minded, negative dolts already bought millions of iPads and will keep buying them because of how freaking useful they are, professionally and non-professionally. They will only keep getting more useful as time goes on.

The main legit critique I've heard is a lack of availability for accessories but that's a production issue, not a product issue. iOS 9 has been out for only a few months and the iPad Pro much less than that... companies have to actually have the device in-hand before they can test and develop on it. Check back in another year or two if it's not up to speed for you yet though.

That's what I did, waited for iPad 3, iPhone 3gs before I felt they were ready enough. 3gs for its day was by far the best thing out, so was the 4s. I don't think the iPad Pro is nearly as far back as the iPad 1 or iPhone 1 were when they launched but give it time...

xthetenth - Monday, January 25, 2016 - link

If you consider features to be bloat, I guess you could say that the iPad has full-fledged applications. It's not true, but you could say it. Actually trying to do even reasonably basic tasks in Google Sheets is horrifying next to desktop Excel. Things like straightforward conditional formatting, pivot tables, the ability to dynamically order the contents of a table and so on might strike you as bloat but they're the foundation of the workflows of people who make the program the cornerstone of their job. An extra monitor is a much better professional tool if it doesn't have its own special snowflake OS with different limitations and way of moving data.

Relic74 - Saturday, February 27, 2016 - link

The office suites available aren't fully fledged applications, they are still just mobile apps. I can't open 80% of my Excel sheets on the iPad Pro simply because it doesn't support Macros, Visual Basic and Databases. Stop trying to convince everyone that the iPad Pro is a laptop replacement, it's not. It's just a bigger iPad, that's all. Which is fine and has its uses, but the only people who would use the iPad Pro as an actual computer are the same ones who could get by using a ChromeBook. A professional person could use the iPad Pro, yes but they would have a focused purpose, which means only a few specific apps, the rest of time would be spent on a desktop or laptop computer. It's a secondary device.

Relic74 - Saturday, February 27, 2016 - link

They're not crippled, just mobile versions and they have their uses. The problem I have with these comments is when someone says that the iPad Pro is immensely better than the Surface Pro 4. These are completely different devices intended for completely different tasks. These comparisons honestly need to stop, one doesn't buy a Surface Pro 4 to use as a tablet and vice versa. The iPad Pro is a content consumption device first and foremost. Yes there are some productivity apps and certain professions like a musician or an artist could take advantage of the iPad Pro's capabilities however it is not and I cannot stress this enough, is not a laptop replacement. Those that can use the iPad Pro as a laptop are the same types of people who can just as easily get by using a ChromeBook. The iPad Pro is a secondary device whereas the Surface Pro is a primary device.

iOS is just too limited in its capabilities to be even considered as a standalone professional device. No, an Architect would not use the iPad Pro to design a house with, the Architect might use one to show off the plans to a client, mark down corrections with the Pencil. No, a programmer would not use the iPad Pro to develop on, he however might use one to create an outline of what needs to be done or even as a second monitor for his laptop. No, a musician would not use one to create his album with, he might use one as the brain for one of his synthesizers, a recording device, instrument, etc. It's a companion device, it's an iPad, nothing wrong with that but stop trying to convince everyone that it's some super computer with fantasy powers. Just the file system issue alone should be enough to tell you that the iPad Pro isn't really meant for content creation.

FunBunny2 - Friday, January 22, 2016 - link

-- Modern software is very bloated memory consumption wise, especially software relying on managed languages, the latter are also significantly slower in terms of performance than languages like C or C++.

well, that was true back in the days of DOS. since windoze, 80% (or thereabouts) of programs just call windoze syscalls, which is largely C++.