Investigating Cavium's ThunderX: The First ARM Server SoC With Ambition

by Johan De Gelas on June 15, 2016 8:00 AM EST. Posted in: SoCs, IT Computing, Enterprise, Enterprise CPUs, Microserver, Cavium

Java Performance

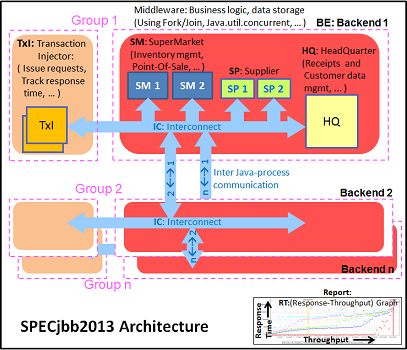

The SPECjbb 2015 benchmark has "a usage model based on a world-wide supermarket company with an IT infrastructure that handles a mix of point-of-sale requests, online purchases, and data-mining operations." It uses the latest Java 7 features and makes use of XML, compressed communication, and messaging with security.

We tested with four groups of transaction injectors and backends. The Java version was OpenJDK 1.8.0_91.

We applied relatively basic tuning to mimic real-world use, while aiming to fit everything inside a server with 64 GB of RAM (to be able to compare to lower end systems):

"-server -Xmx8G -Xms8G -Xmn4G -XX:+AlwaysPreTouch -XX:+UseLargePages"

With these settings, the benchmark takes about 43-55 GB of RAM. Java tends to consume more RAM as more cores/threads are involved. Therefore, we also tested the Xeon E5-2640v4 and ThunderX with these settings:

"-server -Xmx24G -Xms24G -Xmn16G -XX:+AlwaysPreTouch -XX:+UseLargePages"

The setting above uses about 115 GB. Results labeled "large" ("large memory footprint") report the performance with these settings. We did not give the Xeon D-1581 the same treatment, as we wanted to reflect the fact that the Xeon D has only 4 DIMM slots, while the Xeon E5 and ThunderX have (at least) eight.
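As a rough sanity check on those footprints, the sketch below sums the configured heap alone. It assumes, hypothetically, that the quoted flags are applied to one backend JVM per group (four in total); the article does not say which JVMs receive them, and `heap_floor_gb` is an illustrative name rather than anything from the benchmark.

```python
# Rough heap budget for the two JVM configurations quoted above.
# ASSUMPTION: one backend JVM per group (4 groups) receives the quoted flags;
# the article does not spell out which JVMs get them.

GROUPS = 4  # "four groups of transaction injectors and backends"


def heap_floor_gb(xmx_gb: int, jvms: int = GROUPS) -> int:
    """Heap committed up front: -Xms equals -Xmx and AlwaysPreTouch touches all of it."""
    return xmx_gb * jvms


basic = heap_floor_gb(8)    # -Xmx8G per JVM
large = heap_floor_gb(24)   # -Xmx24G per JVM

print(f"basic: {basic} GB of heap, reported footprint 43-55 GB")
print(f"large: {large} GB of heap, reported footprint about 115 GB")
```

Under that assumption, the heap alone accounts for most of the reported footprint in both cases, and the large configuration cannot fit in a 64 GB server.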

The first metric is basically maximum throughput.

Notice how the Xeon D-1581 beats the Xeon E5-2640v4 in a typical throughput situation by 8%, while the SPECint_rate numbers told us that the Xeon E5 would be slightly faster. It is a typical example of how running parallel instances overemphasizes bandwidth. The extra 6 cores (at 2.1 GHz) push the Xeon D past the Xeon E5 (10 cores at 2.6 GHz), despite the fact that the Xeon D has only half the bandwidth available.
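To put the core-count argument in numbers, here is a minimal sketch that multiplies cores by clock speed, using only the figures quoted above. Treating cores × GHz as a throughput proxy is an assumption made for illustration: it ignores IPC, turbo behavior, caches and memory bandwidth, and `core_ghz` is a hypothetical helper name.

```python
# Crude aggregate-clock comparison using only the figures quoted above.
# ASSUMPTION: cores x GHz is a very rough proxy for throughput; it ignores
# IPC, turbo behaviour, caches and memory bandwidth.


def core_ghz(cores: int, clock_ghz: float) -> float:
    """Return the sum of clock speeds over all cores."""
    return cores * clock_ghz


xeon_d = core_ghz(10 + 6, 2.1)   # Xeon D-1581: 6 more cores than the Xeon E5
xeon_e5 = core_ghz(10, 2.6)      # Xeon E5 as quoted: 10 cores at 2.6 GHz

print(f"Xeon D : {xeon_d:.1f} core-GHz")
print(f"Xeon E5: {xeon_e5:.1f} core-GHz")
print(f"ratio  : {xeon_d / xeon_e5:.2f}")
```

The proxy comes out at roughly 1.29 in favor of the Xeon D, while the measured lead is 8%, so core count and clock speed alone overstate the gap.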

The ThunderX offers low end Xeon E5 performance, but that is still a lot better than what we would have expected from the SPECint_rate numbers (Dual socket ThunderX = Xeon-D). Once we offer more memory to the ThunderX, performance goes up by 14%. The Xeon E5 gets a 10% performance boost.

The Critical-jOPS metric is a throughput metric under response time constraint.

Critical-jOPS is the most important metric, as it shows how many requests can be served in a timely manner. At first we thought that the ThunderX was so much slower than the rest of the pack because it lacks the single threaded performance to run the heavier pieces of Java code fast enough.

However, the 48 threads were mostly hindered by the lack of memory per thread. Once we offer enough memory to the 48-headed ThunderX, performance explodes: it is multiplied by 2.4! The Xeon E5 benefits too, but performance is "only" 60% higher. Thanks to the DRAM breathing room, the ThunderX moves from "slower than low end Xeon D" to "midrange Xeon E5" territory.
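The two scaling factors above also tell us how much ground the ThunderX gains on the Xeon E5, without needing the absolute scores. A small sketch of that arithmetic follows; `relative_shift` is an illustrative name, not part of any benchmark tooling.

```python
# How the ThunderX-to-Xeon E5 critical-jOPS ratio shifts with more memory.
# Uses only the scaling factors quoted above; absolute scores are not needed.

thunderx_gain = 2.4   # "multiplied by 2.4"
xeon_e5_gain = 1.6    # "60% higher"


def relative_shift(gain_a: float, gain_b: float) -> float:
    """Factor by which the a/b score ratio changes when both systems speed up."""
    return gain_a / gain_b


shift = relative_shift(thunderx_gain, xeon_e5_gain)
print(f"ThunderX/Xeon E5 ratio improves by a factor of {shift:.2f}")
```

In other words, whatever the gap was with the smaller heaps, the larger heaps shrink it by about half again in the ThunderX's favor.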

82 Comments


vivs26 - Wednesday, June 15, 2016 - link

Not necessarily - (read Amdahl's law of diminishing returns). The performance actually depends on the workload. Having a million cores guarantees nothing in terms of performance unless the workload is parallelizable which in the real world is not as much as we think it could be. I'm curious to see how xeon merged with altera programmable fabric performs than ARM on a server.

maxxbot - Wednesday, June 22, 2016 - link

Technically true but every generation that millstone gets a little smaller, the die area and power needed to translate x86 into uops isn't huge and reduces every generation.

jardows2 - Wednesday, June 15, 2016 - link

Interesting. Faster in a few workloads where heavy use of multi-thread is important, but significantly slower in more single thread workloads. For server use, you don't always want parallelized tasks. The results are pretty much across the board for all the processors tested: If the ThunderX was slower, it was slower than all the Intel chips. If it were faster, it was faster than all but the highest end Intel Chips. With the price only being slightly lower than the cheapest Intel chip being sold, I don't think this is going to be a Xeon competitor at all, but will take a few niche applications where it can do better. With no significant energy savings, we should be looking forward to the ThunderX2 to see if it will bring this into a better alternative.

ddriver - Wednesday, June 15, 2016 - link

There is hardly a server workload where you don't get better throughput by throwing more cores and servers at it. Servers are NOT about parallelized task, but about concurrent tasks. That's why while desktops are still stuck at 8 cores, server chips come with 20 and more... Server workloads are usually very simple, it is just that there is a lot of them. They are so simple and take so little time it literally makes no sense parallelizing them.

jardows2 - Wednesday, June 15, 2016 - link

In the scenario you described, the single-thread performance takes on even more importance, thus highlighting the advantage the Xeon's currently have in most server configurations.

niva - Wednesday, June 15, 2016 - link

Not if the Xeon doesn't have enough cores to actually process 40+ singlethreaded tasks concurrently.

hechacker1 - Wednesday, June 15, 2016 - link

But kernels and VMWare know how to schedule multiple threads on 1 core if it's not being fully utilized. Single threaded IPC can make up for not having as many cores. See the iPhone SoCs for another example.

ddriver - Wednesday, June 15, 2016 - link

Not if you have thousands of concurrent workloads and only like 8 cores. As fast as each core might be, the overhead from workload context switching will eat it up.

willis936 - Thursday, June 16, 2016 - link

Yeah if each task is not significantly longer than a context switch. Context switches are very fast, especially with processors with many sets of SMT registers per core.

ddriver - Thursday, June 16, 2016 - link

If what you suggest is correct, then intel would not be investing chip TDP in more cores but higher clocks and better single threaded performance. Clearly this is not the case, as they are pushing 20 cores at the fairly modest 2.4 Ghz.