The Apple iPad Pro Review

by Ryan Smith, Joshua Ho & Brandon Chester on January 22, 2016 8:10 AM EST

SoC Analysis: On x86 vs ARMv8

Before we get to the benchmarks, I want to spend a bit of time talking about the impact of CPU architectures at a middle degree of technical depth. At a high level, there are a number of peripheral issues when it comes to comparing these two SoCs, such as the quality of their fixed-function blocks. But when you look at what consumes the vast majority of the power, it turns out that the CPU is competing with things like the modem/RF front-end and GPU.

x86-64 ISA registers

Probably the easiest place to start when we’re comparing things like Skylake and Twister is the ISA (instruction set architecture). This subject alone is probably worthy of an article, but the short version for those that aren't really familiar with this topic is that an ISA defines how a processor should behave in response to certain instructions, and how these instructions should be encoded. For example, if you were to add two integers together in the EAX and EDX registers, x86-32 dictates that this would be encoded as 01d0 in hexadecimal. In response to this instruction, the CPU would add whatever value was in the EDX register to the value in the EAX register and leave the result in the EAX register.
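To make the encoding example a bit more concrete, here is a minimal Python sketch of how the two bytes 01 d0 break down. It assumes only the register-to-register form of opcode 0x01 (add r/m32, r32); the function names and the REG32 table are ours for illustration, and a real decoder handles far more cases than this.

```python
# 32-bit general purpose registers in x86 encoding order (index = 3-bit field value).
REG32 = ["eax", "ecx", "edx", "ebx", "esp", "ebp", "esi", "edi"]

def decode_modrm(byte):
    """Split a ModRM byte into its (mod, reg, rm) bit fields."""
    mod = (byte >> 6) & 0b11   # top two bits: addressing mode
    reg = (byte >> 3) & 0b111  # middle three bits: register operand
    rm = byte & 0b111          # low three bits: register-or-memory operand
    return mod, reg, rm

def decode_add_rm32_r32(code):
    """Decode a two-byte 'add r/m32, r32' in its register-direct form only."""
    opcode, modrm = code[0], code[1]
    if opcode != 0x01:
        raise ValueError("this sketch only understands opcode 0x01")
    mod, reg, rm = decode_modrm(modrm)
    if mod != 0b11:
        raise ValueError("this sketch only handles register-direct operands")
    # For opcode 0x01 the r/m field is the destination and reg is the source.
    return f"add {REG32[rm]}, {REG32[reg]}"

print(decode_add_rm32_r32(bytes.fromhex("01d0")))  # add eax, edx
```

The byte 0xd0 is 11 010 000 in binary, so the mode is register-direct, the source is register 2 (EDX), and the destination is register 0 (EAX).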

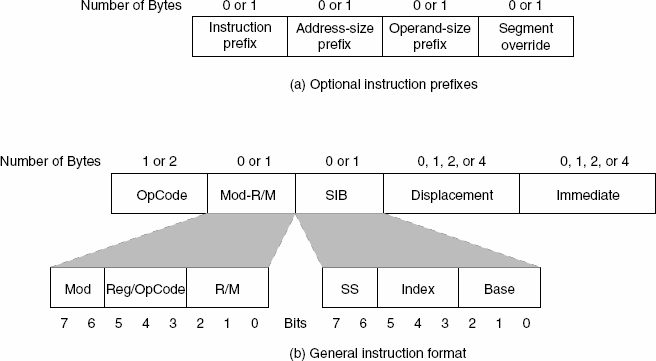

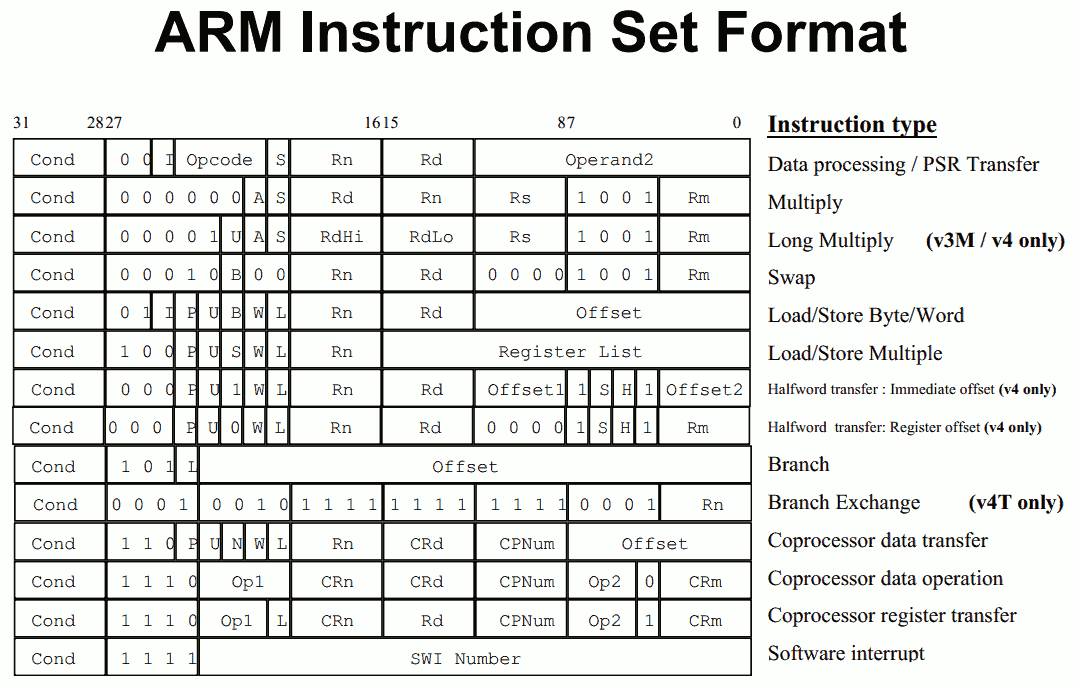

The fundamental difference between x86 and ARM is that x86 is a relatively complex ISA, while ARM is relatively simple by comparison. One key difference is that ARM dictates that every instruction is a fixed number of bits. In the case of ARMv8-A and ARMv7-A, all instructions are 32 bits long unless you're in Thumb mode (which exists only for 32-bit ARM code, not AArch64), where instructions are 16 bits long, but the same sort of trade-offs that come from a fixed-length instruction encoding still apply. Thumb-2 mixes 16-bit and 32-bit instructions, which makes it a variable-length encoding, so in some sense the trade-offs of variable-length instructions apply there instead. It’s important to make a distinction between instruction and data here, because even though AArch64 uses 32-bit instructions the register width is 64 bits, which is what determines things like how much memory can be addressed and the range of values that a single register can hold. By comparison, Intel’s x86 ISA has variable-length instructions. In both x86-32 and x86-64/AMD64, each instruction can range anywhere from 8 to 120 bits (1 to 15 bytes) long depending upon how the instruction is encoded.

At this point, it might be evident that on the implementation side of things, a decoder for x86 instructions is going to be more complex. For a CPU implementing the ARM ISA, because the instructions are of a fixed length the decoder simply reads instructions 2 or 4 bytes at a time. On the other hand, a CPU implementing the x86 ISA would have to determine how many bytes to pull in at a time for an instruction based upon the preceding bytes.
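The difference in decoder work can be sketched in a few lines of Python. This is a toy model, not real x86 or ARM decoding: the `toy_length` rule below (the low four bits of the first byte give the instruction length) is a made-up encoding chosen only to show why variable-length boundaries have to be discovered one after another.

```python
def fixed_boundaries(code, width=4):
    """Fixed-length ISA: every instruction start is known without reading any bytes."""
    return list(range(0, len(code), width))

def variable_boundaries(code, length_of):
    """Variable-length ISA: each start depends on the length of the previous instruction."""
    offsets = []
    pos = 0
    while pos < len(code):
        offsets.append(pos)
        pos += length_of(code, pos)  # must inspect the bytes before moving on
    return offsets

def toy_length(code, pos):
    """Hypothetical length rule: low 4 bits of the first byte, at least 1 byte."""
    return max(1, code[pos] & 0x0F)

# Sixteen bytes of fixed-width code split cleanly into four instructions.
print(fixed_boundaries(bytes(16)))  # [0, 4, 8, 12]

# Seven bytes of toy variable-length code: lengths 2, 1, 3, 1.
stream = bytes([0x02, 0xAA, 0x01, 0x03, 0xBB, 0xCC, 0x01])
print(variable_boundaries(stream, toy_length))  # [0, 2, 3, 6]
```

In the fixed-width case a wide decoder can hand each 4-byte slot to a separate decode unit immediately. In the variable-length case the start of instruction N is not known until instruction N-1 has at least been partially examined, which is the extra complexity an x86 front-end has to hide.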

A57 Front-End Decode, Note the lack of uop cache

While it might sound like the x86 ISA is just clearly at a disadvantage here, it’s important to avoid oversimplifying the problem. Although the decoder of an ARM CPU already knows how many bytes it needs to pull in at a time, this inherently means that unless all 2 or 4 bytes of the instruction are used, each instruction contains wasted bits. While it may not seem like a big deal to “waste” a byte here and there, this can actually become a significant bottleneck in how quickly instructions can get from the L1 instruction cache to the front-end instruction decoder of the CPU. The major issue here is that due to RC delay in the metal wire interconnects of a chip, increasing the size of an instruction cache inherently increases the number of cycles that it takes for an instruction to get from the L1 cache to the instruction decoder on the CPU. If a cache doesn’t have the instruction that you need, it could take hundreds of cycles for it to arrive from main memory.

Of course, there are other issues worth considering. For example, in the case of x86, the instructions themselves can be incredibly complex. One of the simplest examples is certain forms of the add instruction, where either the source or the destination can be in memory, although not both. An example of this might be addq (%rax,%rbx,2), %rdx, which could take 5 CPU cycles to happen in something like Skylake. Of course, pipelining and other tricks can make the throughput of such instructions much higher but that's another topic that can't be properly addressed within the scope of this article.

By comparison, the ARM ISA has no direct equivalent to this instruction. Looking at our example of an add instruction, ARM would require a load instruction before the add instruction. This has two notable implications. The first is that this once again is an advantage for an x86 CPU in terms of instruction density because fewer bits are needed to express a single instruction. The second is that for a “pure” CISC CPU you now have a barrier for a number of performance and power optimizations as any instruction dependent upon the result from the current instruction wouldn’t be able to be pipelined or executed in parallel.
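As a rough illustration of the two styles, the following Python sketch models the effect of addq (%rax,%rbx,2), %rdx as a single step, and then the same work as a separate load followed by a register-only add. The register file is just a dictionary, memory is a dictionary keyed by address, and the scratch register name `tmp` is hypothetical; this models semantics only and is not an actual ARM instruction sequence or a timing model.

```python
MASK64 = (1 << 64) - 1  # keep results within 64-bit register width

def x86_add_from_memory(regs, mem):
    """addq (%rax,%rbx,2), %rdx: address calculation, load and add in one instruction."""
    addr = (regs["rax"] + regs["rbx"] * 2) & MASK64
    regs["rdx"] = (regs["rdx"] + mem[addr]) & MASK64

def load_then_add(regs, mem):
    """Load/store style: the memory access is its own instruction."""
    addr = (regs["rax"] + regs["rbx"] * 2) & MASK64
    regs["tmp"] = mem[addr]                              # step 1: explicit load
    regs["rdx"] = (regs["rdx"] + regs["tmp"]) & MASK64   # step 2: register-only add

mem = {0x1008: 32}
a = {"rax": 0x1000, "rbx": 4, "rdx": 10}
b = dict(a)
x86_add_from_memory(a, mem)
load_then_add(b, mem)
print(a["rdx"], b["rdx"])  # 42 42
```

Both paths end with the same value in the destination register. The difference is that in the second form the load is visible as a separate instruction, whereas in the first form the hardware has to split the work up internally.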

The final issue here is that x86 just has an enormous number of instructions that have to be supported due to backwards compatibility. Part of the reason why x86 became so dominant in the market was that code compiled for the original Intel 8086 would work with any future x86 CPU, but the original 8086 didn’t even have memory protection. As a result, all x86 CPUs made today still have to start in real mode and support the original 16-bit registers and instructions, in addition to 32-bit and 64-bit registers and instructions. Of course, to run a program in 8086 mode is a non-trivial task, but even in the x86-64 ISA it isn't unusual to see instructions that are identical to the x86-32 equivalent. By comparison, ARMv8 is designed such that you can only execute ARMv7 or AArch32 code across exception boundaries, so practically programs are only going to run one type of code or the other.

Back in the 1980s up to the 1990s, this became one of the major reasons why RISC was rapidly becoming dominant as CISC ISAs like x86 ended up creating CPUs that generally used more power and die area for the same performance. However, today ISA is basically irrelevant to the discussion due to a number of factors. The first is that beginning with the Intel Pentium Pro and AMD K5, x86 CPUs were really RISC CPU cores with microcode or some other logic to translate x86 CPU instructions to the internal RISC CPU instructions. The second is that decoding of these instructions has been increasingly optimized around only a few instructions that are commonly used by compilers, which makes the x86 ISA practically less complex than what the standard might suggest. The final change here has been that ARM and other RISC ISAs have gotten increasingly complex as well, as it became necessary to enable instructions that support floating point math, SIMD operations, CPU virtualization, and cryptography. As a result, the RISC/CISC distinction is mostly irrelevant when it comes to discussions of power efficiency and performance as microarchitecture is really the main factor at play now.

408 Comments


nsteussy - Friday, January 22, 2016 - link

Well said.

Wayne Hall - Friday, January 22, 2016 - link

WHAT IS MEANT BY PROFESSIONAL TASKS. I AM THINKING OF THE I-PAD PRO.

gw74 - Friday, January 22, 2016 - link

Why do Apple only want content consumers' money in mobile, and not creators' too? Apple are in business to make a profit. If there was money to be made building workstation apps for mobile, it would happen. Furthermore "exploit their owners commercially" is just a pejorative way of saying "sell them stuff in return for money", i.e. "business".

Sc0rp - Friday, January 22, 2016 - link

1) Apple made the pencil. I'm sure that they want creator's money too.

2) The "Pro" market is incredibly small and fickle.

AnakinG - Friday, January 22, 2016 - link

I think Apple wants people to "think" they are creators and professionals. It's a feel good thing while making money. :)

Constructor - Saturday, January 23, 2016 - link

The iPad Pro is a fantastic device for all kinds of uses – I personally also use it as a mobile TV, streaming radio (due to its really excellent speakers), game console, internet and magazine reader (since it is pretty much exactly magazine-sized!), drawing board, note pad, multi-purpose communicator (mail, messaging, FaceTime etc.), web reader, ebook reader and so on...

As to the numbers: According to an external survey it seems about 12% of all iPads sold in the past quarter were iPad Pros. We're talking about millions of devices there at the scale at which Apple is operating – most other tablet manufacturers and even PC manufacturers would kill for numbers like these at prices like these!

It's almost funny how some people completely freak out about the iPad Pro because it crashes through their imaginary boundaries between their imaginary "allowed" kinds of devices.

The iPad Pro is a really excellent computer for the desktop (if for whatever reason an even bigger display is not available), which also works really well on my lap (actually much better and almost always more conveniently than a "laptop" computer!) and even in handheld use like a magazine or notepad. It's very light for its size, has a really excellent screen, excellent speakers and is fast and responsive.

Yeah, you can find things which you at this moment can't do on it yet. But there are many, many practically relevant uses at which it excels to a far greater extent than any desktop or notebook computer ever could.

jasonelmore - Sunday, January 24, 2016 - link

so you basically use it as a consumption and a communication device.. our point is, a professional cannot use this as their only computing device. they need PC's or MAC's to supplement it, which all use Intel or AMD.

Until this fact changes, intel is far from being in trouble. iPad sales are in a huge slump as well, not just pc sales. Actually, Notebook PC sales are great, its the desktop that slows down every 3-4 years. iPhone is about to become a 0 growth product as well. Apple see's the writing on the wall, and that's why they are exploring cars, and other unknown products. The Chinese market never turned out like they had hoped, with stiff competition at low costs with similar quality.

Constructor - Sunday, January 24, 2016 - link

so you basically use it as a consumption and a communication device..

Among many other things! So let me guess, when you happen to play some streaming music on your workplace computer or if you're watching the news on it, does it automatically turn into a "toy" and yourself into one of those mythical "only consumers" as well?

This silly ideology is really ludicrous.

our point is, a professional cannot use this as their only computing device. they need PC's or MAC's to supplement it, which all use Intel or AMD.

Nope.

Some portion of workplaces actually requires a desktop OS. This portion is not 100% but substantially lower than that.

A very large portion of workplaces (likely the majority) could very well use iPads as well, but external circumstances make regular PCs or Macs just more convenient and practical.

And some other portion can and does use mobile devices already now as their primary tools.

The tedious and absurd conclusion from people's own limited knowledge and imagination to absolute judgments of the entire market is anything but new, but it's really old news by now.

Until this fact changes, intel is far from being in trouble. iPad sales are in a huge slump as well, not just pc sales. Actually, Notebook PC sales are great, its the desktop that slows down every 3-4 years. iPhone is about to become a 0 growth product as well. Apple see's the writing on the wall, and that's why they are exploring cars, and other unknown products. The Chinese market never turned out like they had hoped, with stiff competition at low costs with similar quality.

You should seriously get better sources for your information as your imaginations are rather far off from actual reality.

Intel is already in a tightening squeeze between the eroding PC market (especially regarding its crumbling profitability) and the ever-rising development costs they face with their creaking x86 antiquity. That their CPU performance is stagnating at the same time is also increasingly problematic, too, since it puts another damper on the PC market.

When you're talking about Apple you clearly live in a different universe from the rest of us: Apple is actually booming in China while the cheap manufacturers have run into unexpected difficulties against them, and your other imaginations of Apple's doom are neither original nor do they have anything to do with the actual reality on the ground.

Even the iPad is a massive cash cow on a scale the competition can only dream about – it's just dwarfed by the absolute gigantic profits from the iPhone.

But yeah, surely that spells inescapable doom for the company.

Sure!

Coldmode - Friday, January 22, 2016 - link

This is the stupidest paragraph about computing I've ever read. It's equivalent to lamenting Bell's role in telephony because we managed to win World War 1 with telegraphs but now teenagers spend all their time hanging off the kitchen set chatting to one another about their crushes.

ABR - Monday, January 25, 2016 - link

@ddriver I disagree with most of what you say in these comments, but, "today we have gigahertz and gigabytes in our pockets, and the best we can do with it is duck face photos," hits the nail on the head! The problem though is not that software developers don't try to do more, but that they can't make any money doing so. The masses just want to buy the latest duck photo app, and there's not enough of the pie left over to support much else. In the early days of the iPad this wasn't so, but nowadays take a look at the top charts in iOS to see what I mean. Games make more than all others put together, and then even in categories like Utilities, you see mainly Minecraft aids, emoji texters, and a few web browser add-ons. Apple doesn't promote this in their advertising, but they do so in more subtle but effective ways like which apps they choose to feature and promote in the app store. In fact, the store is littered with all kinds of creativity- and productivity-unleashing apps if you search hard, but they all tend to die on the vine because they get swamped out by the latest glossy-image joke-text-photo-video apps and the developer loses interest.