Investigating Cavium's ThunderX: The First ARM Server SoC With Ambition

by Johan De Gelas on June 15, 2016 8:00 AM EST- Posted in

- SoCs

- IT Computing

- Enterprise

- Enterprise CPUs

- Microserver

- Cavium

Java Performance

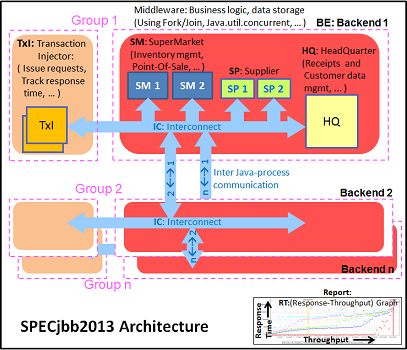

The SPECjbb 2015 benchmark has "a usage model based on a world-wide supermarket company with an IT infrastructure that handles a mix of point-of-sale requests, online purchases, and data-mining operations." It uses the latest Java 7 features and makes use of XML, compressed communication, and messaging with security.

We tested with four groups of transaction injectors and backends. The Java version was OpenJDK 1.8.0_91.

We applied relatively basic tuning to mimic real-world use, while aiming to fit everything inside a server with 64 GB of RAM (to be able to compare to lower end systems):

"-server -Xmx8G -Xms8G -Xmn4G -XX:+AlwaysPreTouch -XX:+UseLargePages"

With these settings, the benchmark takes about 43-55GB of RAM. Java tends to consume more RAM as more core/threads are involved. Therefore, we also tested the Xeon E5-2640v4 and ThunderX with these settings:

"-server -Xmx24G -Xms24G -Xmn16G -XX:+AlwaysPreTouch -XX:+UseLargePages"

The setting above uses about 115 GB. The labels "large" ("large memory footprint") report the performance of these settings. We did not give the Xeon D-1581 the same treatment as we wanted to mimic the fact that the Xeon has only 4 DIMM slots, while the Xeon E5 and ThunderX have (at least) eight.

The first metric is basically maximum throughput.

Notice how the Xeon D-1581 beats the Xeon E5-2640 in a typical throughput situation by 8%, while the SPECint_rate numbers told us that the Xeon E5 would be slightly faster. It is a typical example of how running parallel instances overemphasizes bandwidth. The extra 6 cores (@2.1 GHz) push the Xeon D past the Xeon E5 (10 cores@ 2.6 GHz) despite the fact that the Xeon D has only half the bandwidth available.

The ThunderX offers low end Xeon E5 performance, but that still a lot better than what we would have expected from the SPECint_rate numbers (Dual socket ThunderX = Xeon-D). Once we offer more memory to ThunderX, performance goes up by 14%. The Xeon E5 gets a 10% performance boost.

The Critical-jOPS metric is a throughput metric under response time constraint.

The critical jOPS is the most important metric as it shows how many requests can be served in a timely manner. At first we though that the lack of single threaded performance to run the heavier pieces of Java code fast enough is what made the ThunderX so much slower than the rest of the pack.

However, the 48 threads were mostly hindered by the lack of memory per thread. Once we offer enough memory to the 48-headed ThunderX, performance explodes: it is multiplied by 2.4x! The Xeon E5 benefits too, but performance is "only" 60% higher. Thanks to the DRAM breathing room, the ThunderX moves from "slower than low end Xeon D" to "midrange Xeon E5" territory.

82 Comments

View All Comments

silverblue - Thursday, June 16, 2016 - link

I think AMD themselves admitted that the Opteron X1100 was for testing the waters, with K12 being the first proper solution, but that was delayed to get Zen out of the door. I imagine that both products will be on sale concurrently at some point, but even with AMD's desktop-first approach for Zen, it will probably still come to the server market before K12 (both are due 2017).junky77 - Thursday, June 16, 2016 - link

still, quite strange, no? AMD is in the server business for years. I'm not talking about their ARM solution only, but their other solutions seem to be less interesting..silverblue - Thursday, June 16, 2016 - link

I am looking forward to both Zen and K12; there's very little chance that AMD will fail with both.name99 - Wednesday, June 15, 2016 - link

" It is the first time the Xeon D gets beaten by an ARM v8 SoC..."The Apple A9X in the 12" iPad Pro delivers 40GB/s on Stream...

(That's the Stream built into Geekbench. Conceivably it's slightly different from what's being measured here, but it delivers around 25GB/s for standard desktop/laptop Intel CPUs, and for the A9 and the 9" iPad's A9X, so it seems in the same sort of ballpark.)

aryonoco - Thursday, June 16, 2016 - link

Fantastic article as always Johan. Thank you so much for your very informative articles. I can only imagine how much time and effort writing this article took. It is very much appreciated.The first good showing by an ARMv8 server. Nearly 5 years later than expected, but they are getting there. This thing was still produced on 28 HKMG. Give it one more year, a jump to 14nm, and a more mature software ecosystem, and I think the Xeons might finally have some competition on their hands.

JohanAnandtech - Thursday, June 16, 2016 - link

Thank you, and indeed it was probably the most time consuming review ... since Calxeda. :-)Yes, there is potential.

iwod - Thursday, June 16, 2016 - link

Even if the ThunderX is half the price of equivalent Xeon, I would still buy Intel Xeon instead. This isn't Smartphone market. In Server, The cost memory and Storage, Networking etc adds up. Not only does it uses a lot more power in Idle, the total TCO AND Pref / Watts still flavours Intel.There is also the switching cost of Software involved.

And those who say Single Core / Thread Performance dont matter have absolutely no idea what they are talking about.

As far as I can tell, Xeon-D offers a very decent value proposition for even the ARM SoC minded vendors. This will likely continue to be the case as we move to 10nm. I just dont see how ARM is going to get their 20% market share by 2020 as they described in their Shareholder meetings.

rahvin - Thursday, June 16, 2016 - link

If you have to switch software on your severs because you switch architecture you are doing something wrong and are far too dependent on proprietary products. I'm being a bit facetious here but the only reason architecture should limit you is you are using Microsoft products or are in a highly specialized computing field. Linux should dominate your general servers.kgardas - Friday, June 17, 2016 - link

Even if you are on Linux, still stack support is best on i386/amd64. Look at IBM how it throws a lot of money to get somewhere with POWER8. ARM can't do that, so it's more on vendors to do that and they are doing it a little bit more slowly. Anyway, even AArch64 will mature in LLVM/GCC tool chain, GNU libC, musl libC, linux kernel etc but it'll take some time...tuxRoller - Thursday, June 16, 2016 - link

Aarch64 has very limited conditional execution support.http://infocenter.arm.com/help/index.jsp?topic=/co...