Investigating Cavium's ThunderX: The First ARM Server SoC With Ambition

by Johan De Gelas on June 15, 2016 8:00 AM EST - Posted in SoCs, IT Computing, Enterprise, Enterprise CPUs, Microserver, Cavium

Java Performance

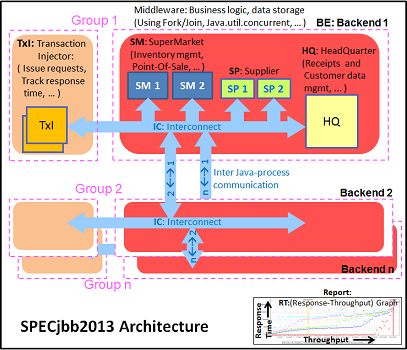

The SPECjbb 2015 benchmark has "a usage model based on a world-wide supermarket company with an IT infrastructure that handles a mix of point-of-sale requests, online purchases, and data-mining operations." It uses the latest Java 7 features and makes use of XML, compressed communication, and messaging with security.

We tested with four groups of transaction injectors and backends. The Java version was OpenJDK 1.8.0_91.

We applied relatively basic tuning to mimic real-world use, while aiming to fit everything inside a server with 64 GB of RAM (so that we could compare with lower-end systems):

"-server -Xmx8G -Xms8G -Xmn4G -XX:+AlwaysPreTouch -XX:+UseLargePages"

With these settings, the benchmark takes about 43-55 GB of RAM. Java tends to consume more RAM as more cores/threads are involved. Therefore, we also tested the Xeon E5-2640v4 and ThunderX with these settings:

"-server -Xmx24G -Xms24G -Xmn16G -XX:+AlwaysPreTouch -XX:+UseLargePages"

These settings use about 115 GB. Results labeled "large" (for "large memory footprint") were obtained with them. We did not give the Xeon D-1581 the same treatment, as we wanted to mimic the fact that the Xeon D has only four DIMM slots, while the Xeon E5 and ThunderX have (at least) eight.
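To make the two tunings concrete, here is a small Python sketch of the heap arithmetic. It rests on an assumption of ours that the text does not state: that the -Xmx/-Xmn flags apply to each of the four backend JVMs. `heap_budget` is a hypothetical helper. Because -Xms equals -Xmx and -XX:+AlwaysPreTouch is set, each of those heaps is committed and touched at JVM startup, so together they form a fixed floor under the measured footprint.

```python
# Sketch of the heap arithmetic behind the two tunings.
# ASSUMPTION: the flags apply to each of the four backend JVMs; the review
# does not say how the injector/controller JVMs were sized.

GROUPS = 4  # four groups of transaction injectors and backends


def heap_budget(xmx_gb: int, xmn_gb: int, groups: int = GROUPS) -> dict:
    """Young/old split per JVM and total fixed heap (GB) across all groups."""
    if xmn_gb >= xmx_gb:
        raise ValueError("young generation (-Xmn) must be smaller than -Xmx")
    return {
        "young_per_jvm": xmn_gb,           # -Xmn sizes the young generation
        "old_per_jvm": xmx_gb - xmn_gb,    # the rest of the heap is old generation
        "total_heap": groups * xmx_gb,     # -Xms == -Xmx, so fully committed
    }


default = heap_budget(xmx_gb=8, xmn_gb=4)   # "-Xmx8G -Xms8G -Xmn4G"
large = heap_budget(xmx_gb=24, xmn_gb=16)   # "-Xmx24G -Xms24G -Xmn16G"

# Footprints quoted in the text (GB). Anything above the backend heaps is
# non-heap JVM memory plus whatever the other JVMs use.
overhead_default = (43 - default["total_heap"], 55 - default["total_heap"])
overhead_large = 115 - large["total_heap"]

print(default)           # {'young_per_jvm': 4, 'old_per_jvm': 4, 'total_heap': 32}
print(large)             # {'young_per_jvm': 16, 'old_per_jvm': 8, 'total_heap': 96}
print(overhead_default)  # (11, 23)
print(overhead_large)    # 19
```

Note that the "large" tuning quadruples the young generation per JVM but only doubles the old generation, which is one plausible reason the extra memory helps a many-threaded chip.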

The first metric, max-jOPS, is essentially maximum throughput.

Notice how the Xeon D-1581 beats the Xeon E5-2640v4 by 8% in a typical throughput situation, even though the SPECint_rate numbers told us that the Xeon E5 would be slightly faster. This is a typical example of how running many parallel instances, as SPECint_rate does, overemphasizes memory bandwidth. The Xeon D's six extra cores (16 cores @ 2.1 GHz) push it past the Xeon E5 (10 cores @ 2.6 GHz), despite the fact that the Xeon D has only half the bandwidth available.
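As a rough illustration of the core-count argument, the Python sketch below multiplies the core counts by the clocks quoted above (16 cores @ 2.1 GHz for the Xeon D-1581, 10 cores @ 2.6 GHz for the Xeon E5). Aggregate core-GHz is our own crude proxy, not a metric from this review: it ignores IPC, turbo behavior, caches and the memory bandwidth gap. `core_ghz` is a hypothetical helper.

```python
# Crude proxy: aggregate core-GHz (cores x clock). Not a benchmark metric;
# it ignores IPC, turbo behavior, caches and memory bandwidth.


def core_ghz(cores: int, ghz: float) -> float:
    """Total clock cycles available per nanosecond across all cores."""
    return cores * ghz


xeon_d = core_ghz(16, 2.1)   # Xeon D-1581: the E5's 10 cores plus 6 extra, @ 2.1 GHz
xeon_e5 = core_ghz(10, 2.6)  # Xeon E5-2640v4: 10 cores @ 2.6 GHz

ratio = xeon_d / xeon_e5
print(f"Xeon D: {xeon_d:.1f}, Xeon E5: {xeon_e5:.1f}, ratio: {ratio:.2f}")
# Xeon D: 33.6, Xeon E5: 26.0, ratio: 1.29
```

The Xeon D comes out with roughly 29% more raw core-GHz; the measured 8% lead is much smaller, which is to be expected from a proxy this crude.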

The ThunderX offers low-end Xeon E5 performance, but that is still a lot better than we would have expected from the SPECint_rate numbers, where a dual-socket ThunderX was on par with the Xeon D. Once we offer the ThunderX more memory, performance goes up by 14%. The Xeon E5 gets a 10% performance boost.

The critical-jOPS metric measures throughput under response-time constraints.
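For readers who want to see how a single "throughput under response-time constraints" score can be formed, here is a minimal Python sketch. As we understand SPEC's documentation, critical-jOPS is the geometric mean of the jOPS achieved under response-time SLAs of 10, 25, 50, 75 and 100 ms; treat that definition as our assumption and check the SPECjbb2015 user guide. `critical_jops` and the sample values are hypothetical, not results from this review.

```python
from statistics import geometric_mean

# ASSUMPTION: SLA thresholds (ms) as we understand SPEC's critical-jOPS definition.
SLA_MS = (10, 25, 50, 75, 100)


def critical_jops(jops_at_sla: dict) -> float:
    """Combine the best jOPS reached under each response-time SLA into one score."""
    missing = [sla for sla in SLA_MS if sla not in jops_at_sla]
    if missing:
        raise KeyError(f"no jOPS value for SLA(s): {missing}")
    values = [jops_at_sla[sla] for sla in SLA_MS]
    if any(v <= 0 for v in values):
        raise ValueError("jOPS values must be positive")
    return geometric_mean(values)


# Hypothetical per-SLA results, chosen only to make the arithmetic easy to follow.
sample = {10: 1000, 25: 2000, 50: 4000, 75: 8000, 100: 16000}
score = critical_jops(sample)
print(round(score))  # 4000
```

An arithmetic mean of the same inputs would be 6,200. The geometric mean lets weak results at the tight SLAs pull the score down much harder, which is why a chip that struggles to answer quickly suffers on this metric.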

Critical-jOPS is the most important metric, as it shows how many requests can be served in a timely manner. At first, we thought that the ThunderX was so much slower than the rest of the pack because its single-threaded performance was too low to run the heavier pieces of Java code fast enough.

However, the 48 threads were mostly hindered by the lack of memory per thread. Once we offer enough memory to the 48-headed ThunderX, performance explodes, rising 2.4x! The Xeon E5 benefits too, but its performance is "only" 60% higher. Thanks to the DRAM breathing room, the ThunderX moves from "slower than low-end Xeon D" to "midrange Xeon E5" territory.

82 Comments


JohanAnandtech - Wednesday, June 15, 2016

Good suggestion. I have been using an IPMI client to manage several other servers, like the IBM servers. However, such a GUI client is still a bit more user-friendly; IPMI commands can get complicated if you don't use them regularly. The thing is that HP's and Intel's BMC GUIs are a lot easier to use and more reliable.

fanofanand - Wednesday, June 15, 2016

I think you may have an inaccurate figure of 141 at idle (in the graph) for the Thunder. "makes us suspect that the chip is consuming between 40 and 50W at idle, as measured at the wall".

JohanAnandtech - Wednesday, June 15, 2016

If you look at the column "peak vs idle", you see 82W. At peak, we assume that a 120W TDP chip will probably need about 130W. 130W - 82W (both measured at the wall) = 48W, so roughly 50W for the SoC alone at idle measured at the wall, and anywhere between 40-50W in reality. My calculation is a "guesstimate", but it is clear that the Cavium chip needs much more at idle than the Intel chips (10-15W).

djayjp - Wednesday, June 15, 2016

Many spelling/grammar issues here. It impacts readability. Please read before posting.

djayjp - Wednesday, June 15, 2016

That is to say, in the article.

mariush - Wednesday, June 15, 2016

These guys are already working on ThunderX2 (54 cores, 3 GHz, 14nm, ARMv8) and they already have functional chips: https://www.youtube.com/watch?v=ei9uVskwPNE

Meteor2 - Thursday, June 16, 2016

It's always jam tomorrow, isn't it? Intel is working on new chips too, you know.

beginner99 - Wednesday, June 15, 2016

It loses very clearly in performance/watt to Xeon-D. In this segment, the lower price doesn't matter, and neither does the fact that it has a process disadvantage. What counts is the end result. And I doubt it would cost $800 if made on 14/16nm. I mean, why would anyone buying this take the risk? It's a safer bet to go with Intel, also due to more flexible use (single and multi threaded). The latency issue is mentioned but downplayed.

blaktron - Wednesday, June 15, 2016

So downplayed. Anandtech desperately wants ARM servers, but it's a solution looking for a problem. Big web front ends running on bare metal are such a small percentage of the server market that developing for it seems stupid. Xeon-D was already in development for SANs; they just repurposed it for docker and nginx.

Senti - Wednesday, June 15, 2016

Very nice article. I especially liked the emphasis on how the test numbers relate to real-world workloads and on what was problematic during the testing. It would be great to see the same style of desktop CPU review (Zen?) from you instead of the mix of reprinted marketing hype and silly benchmark-number dumps that has plagued this site for quite some time now.

Some annoying typos here and there, like "It is clear that the ThunderX is a match for high frequency trading", but nothing really bad.