The Apple iPad Pro Review

by Ryan Smith, Joshua Ho & Brandon Chester on January 22, 2016 8:10 AM EST

SoC Analysis: On x86 vs ARMv8

Before we get to the benchmarks, I want to spend a bit of time talking about the impact of CPU architectures at a middle degree of technical depth. At a high level, there are a number of peripheral issues when it comes to comparing these two SoCs, such as the quality of their fixed-function blocks. But when you look at what consumes the vast majority of the power, it turns out that the CPU is competing with things like the modem/RF front-end and GPU.

x86-64 ISA registers

Probably the easiest place to start when we’re comparing things like Skylake and Twister is the ISA (instruction set architecture). This subject alone is probably worthy of an article, but the short version for those who aren't really familiar with this topic is that an ISA defines how a processor should behave in response to certain instructions, and how these instructions should be encoded. For example, if you were to add together two integers held in the EAX and EDX registers, x86-32 dictates that this instruction would be encoded as 01d0 in hexadecimal. In response to this instruction, the CPU would add whatever value was in the EDX register to the value in the EAX register and leave the result in the EAX register.
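To make the encoding example concrete, here is a minimal sketch that unpacks the two bytes 01 d0 by hand. It only handles the register-to-register form of opcode 0x01 (ADD r/m32, r32); the function name and the register table are illustrative, not part of any real disassembler.

```python
# Register numbering used by the 32-bit x86 ModRM byte.
REG32 = ["eax", "ecx", "edx", "ebx", "esp", "ebp", "esi", "edi"]


def decode_add_rm32_r32(code: bytes):
    """Decode the two-byte register form of ADD r/m32, r32 (opcode 0x01).

    Returns (destination, source). Only mod == 0b11 (register-direct)
    is handled; memory forms are out of scope for this sketch.
    """
    if len(code) != 2 or code[0] != 0x01:
        raise ValueError("expected opcode 0x01 followed by one ModRM byte")
    modrm = code[1]
    mod = modrm >> 6             # top two bits: addressing mode
    reg = (modrm >> 3) & 0b111   # middle three bits: source register
    rm = modrm & 0b111           # low three bits: destination (r/m) register
    if mod != 0b11:
        raise ValueError("only register-direct operands are handled here")
    return REG32[rm], REG32[reg]


dest, src = decode_add_rm32_r32(bytes.fromhex("01d0"))
print(f"add {dest}, {src}")  # Intel syntax: destination first
```

The second byte, 0xd0, is 11 010 000 in binary: register-direct mode, source register 2 (EDX), destination register 0 (EAX), so the sum ends up in EAX.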

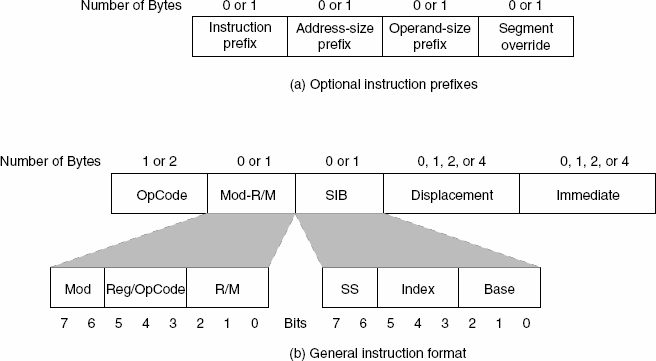

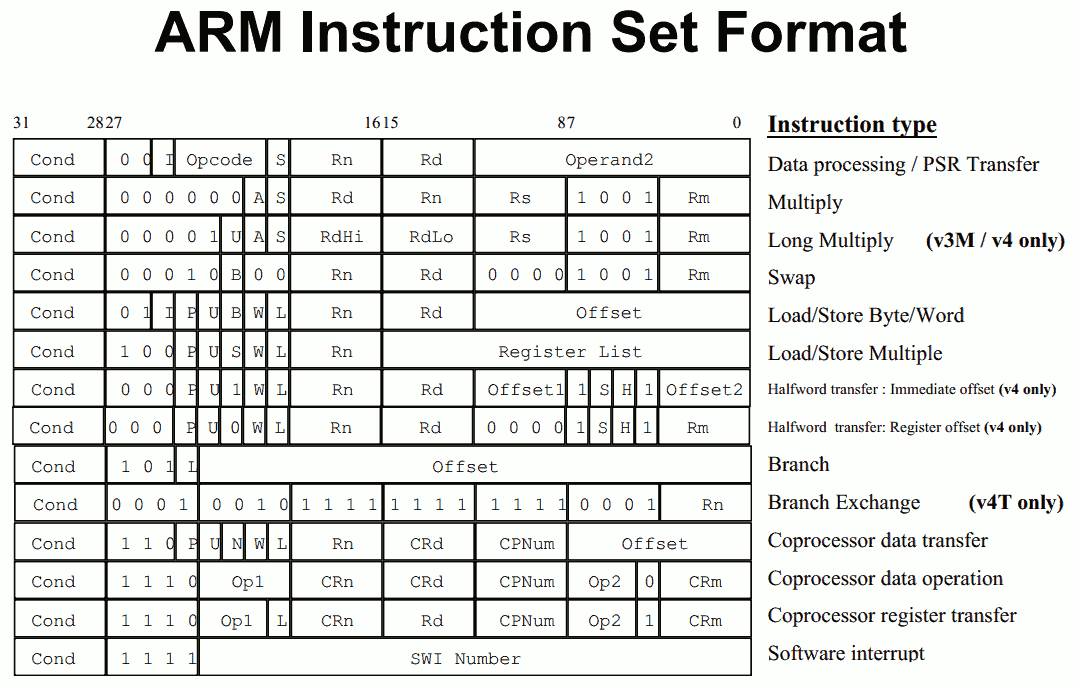

The fundamental difference between x86 and ARM is that x86 is a relatively complex ISA, while ARM is relatively simple by comparison. One key difference is that ARM dictates that every instruction is a fixed number of bits. In the case of ARMv8-A and ARMv7-A, all instructions are 32 bits long unless you're in Thumb mode (which on ARMv8-A exists only in the 32-bit AArch32 state), where instructions are 16 bits long, but the same sort of trade-offs that come from a fixed-length instruction encoding still apply. Thumb-2 mixes 16-bit and 32-bit instructions, so in some sense it shares the trade-offs of a variable-length ISA. It’s important to make a distinction between instruction and data here, because even though AArch64 uses 32-bit instructions the register width is 64 bits, which is what determines things like how much memory can be addressed and the range of values that a single register can hold. By comparison, Intel’s x86 ISA has variable-length instructions. In both x86-32 and x86-64/AMD64, each instruction can range anywhere from 8 to 120 bits long depending upon how the instruction is encoded.

At this point, it might be evident that on the implementation side of things, a decoder for x86 instructions is going to be more complex. For a CPU implementing the ARM ISA, because the instructions are of a fixed length the decoder simply reads instructions 2 or 4 bytes at a time. On the other hand, a CPU implementing the x86 ISA would have to determine how many bytes to pull in at a time for an instruction based upon the preceding bytes.
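The difference in decoder work can be sketched in a few lines. This is a toy model under stated assumptions: `toy_length` is a hypothetical length rule invented for illustration (the low two bits of the first byte, plus one), not the real x86 encoding, whose length depends on prefixes, opcode, ModRM, SIB, displacement, and immediate fields.

```python
def split_fixed(code: bytes, width: int = 4):
    """Fixed-width ISA: every boundary is known up front, so each slot
    could be handed to a separate decoder without inspecting the others."""
    if len(code) % width:
        raise ValueError("code length must be a multiple of the width")
    return [code[i:i + width] for i in range(0, len(code), width)]


def split_variable(code: bytes, length_of):
    """Variable-width ISA: the start of instruction N+1 is only known
    after the length of instruction N has been worked out."""
    out, pos = [], 0
    while pos < len(code):
        n = length_of(code, pos)
        if n <= 0 or pos + n > len(code):
            raise ValueError(f"truncated or invalid instruction at {pos}")
        out.append(code[pos:pos + n])
        pos += n
    return out


def toy_length(code: bytes, pos: int) -> int:
    """Hypothetical rule: low 2 bits of the first byte, plus one (1..4 bytes)."""
    return (code[pos] & 0b11) + 1


stream = bytes([0x00, 0x01, 0xAA, 0x03, 1, 2, 3, 0x02, 9, 9])
print([len(i) for i in split_variable(stream, toy_length)])
print([len(i) for i in split_fixed(bytes(8))])
```

The fixed-width split is a pure slicing operation, while the variable-width split has a loop-carried dependency on `pos`. Real x86 front-ends use various techniques to avoid walking the bytes strictly one instruction at a time, but the underlying dependency is the one shown here.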

A57 Front-End Decode, Note the lack of uop cache

While it might sound like the x86 ISA is just clearly at a disadvantage here, it’s important to avoid oversimplifying the problem. Although the decoder of an ARM CPU already knows how many bytes it needs to pull in at a time, this inherently means that unless all 2 or 4 bytes of the instruction are used, each instruction contains wasted bits. While it may not seem like a big deal to “waste” a byte here and there, this can actually become a significant bottleneck in how quickly instructions can get from the L1 instruction cache to the front-end instruction decoder of the CPU. The major issue here is that due to RC delay in the metal wire interconnects of a chip, increasing the size of an instruction cache inherently increases the number of cycles that it takes for an instruction to get from the L1 cache to the instruction decoder on the CPU. If a cache doesn’t have the instruction that you need, it could take hundreds of cycles for it to arrive from main memory.

Of course, there are other issues worth considering. For example, in the case of x86, the instructions themselves can be incredibly complex. One of the simplest examples is the add instruction, where either the source or the destination can be in memory, although not both. An example of this might be addq (%rax,%rbx,2), %rdx, which could take 5 CPU cycles to complete in something like Skylake. Of course, pipelining and other tricks can make the throughput of such instructions much higher but that's another topic that can't be properly addressed within the scope of this article.

By comparison, the ARM ISA has no direct equivalent to this instruction. Looking at our example of an add instruction, ARM would require a load instruction before the add instruction. This has two notable implications. The first is that this once again is an advantage for an x86 CPU in terms of instruction density because fewer bits are needed to express a single instruction. The second is that for a “pure” CISC CPU you now have a barrier for a number of performance and power optimizations as any instruction dependent upon the result from the current instruction wouldn’t be able to be pipelined or executed in parallel.

The final issue here is that x86 just has an enormous number of instructions that have to be supported due to backwards compatibility. Part of the reason why x86 became so dominant in the market was that code compiled for the original Intel 8086 would work with any future x86 CPU, but the original 8086 didn’t even have memory protection. As a result, all x86 CPUs made today still have to start in real mode and support the original 16-bit registers and instructions, in addition to 32-bit and 64-bit registers and instructions. Of course, to run a program in 8086 mode is a non-trivial task, but even in the x86-64 ISA it isn't unusual to see instructions that are identical to the x86-32 equivalent. By comparison, ARMv8 is designed such that you can only execute ARMv7 or AArch32 code across exception boundaries, so practically programs are only going to run one type of code or the other.

Back in the 1980s up to the 1990s, this became one of the major reasons why RISC was rapidly becoming dominant as CISC ISAs like x86 ended up creating CPUs that generally used more power and die area for the same performance. However, today ISA is basically irrelevant to the discussion due to a number of factors. The first is that beginning with the Intel Pentium Pro and AMD K5, x86 CPUs were really RISC CPU cores with microcode or some other logic to translate x86 CPU instructions to the internal RISC CPU instructions. The second is that decoding of these instructions has been increasingly optimized around only a few instructions that are commonly used by compilers, which makes the x86 ISA practically less complex than what the standard might suggest. The final change here has been that ARM and other RISC ISAs have gotten increasingly complex as well, as it became necessary to enable instructions that support floating point math, SIMD operations, CPU virtualization, and cryptography. As a result, the RISC/CISC distinction is mostly irrelevant when it comes to discussions of power efficiency and performance as microarchitecture is really the main factor at play now.
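As a rough illustration of that translation step, the sketch below splits an add with a memory operand into simple load/store-style operations, which is also the shape a load/store ISA like ARM makes explicit in the instruction stream. It is a toy model only: `MicroOp`, `crack_add`, and the `tmp0` temporary are hypothetical names, operands are plain strings with memory references written in square brackets, and real decoders are far more involved than this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MicroOp:
    op: str       # "load", "add", or "store"
    dest: str     # register name or [memory] reference written to
    srcs: tuple   # operands read


def crack_add(dest: str, src: str):
    """Split `add dest, src` into load/store-style micro-ops.

    Operands wrapped in [] are treated as memory references;
    tmp0 is a hypothetical internal temporary register.
    """
    dest_mem = dest.startswith("[")
    src_mem = src.startswith("[")
    if dest_mem and src_mem:
        raise ValueError("x86 add cannot take two memory operands")
    if src_mem:
        return [
            MicroOp("load", "tmp0", (src,)),
            MicroOp("add", dest, (dest, "tmp0")),
        ]
    if dest_mem:
        return [
            MicroOp("load", "tmp0", (dest,)),
            MicroOp("add", "tmp0", ("tmp0", src)),
            MicroOp("store", dest, ("tmp0",)),
        ]
    return [MicroOp("add", dest, (dest, src))]


# The article's addq (%rax,%rbx,2), %rdx, written destination-first.
for uop in crack_add("rdx", "[rax+rbx*2]"):
    print(uop.op, uop.dest, uop.srcs)
```

Once the instruction is in this form, the load and the add can be scheduled like any other simple operations, which is part of why the ISA-level complexity matters less than it once did.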

408 Comments

ddriver - Saturday, January 23, 2016 - link

So in your expert opinion, all programs do is syscalls? No application logic, no application data? LOL

Also, API calls are NOT syscalls. Syscalls are requests to OS kernel, API calls are just regular calls to a library. Fundamentally different things.

FunBunny2 - Saturday, January 23, 2016 - link

-- Fundamentally different things.

exactly the same: you're not writing active code, but calling out to somebody else's code to do the work. in neither case does it matter what your source language is, from a performance point of view. there's a reason that java mostly beats C++ these days.

Constructor - Saturday, January 23, 2016 - link

The main reason for that is the "well, it's fast enough, and if not we'll compensate with CPU upgrades" mentality in many projects.

gistya - Sunday, January 24, 2016 - link

Why are we talking about Java and C++ here? Just curious.

I recently worked on Google's j2objc project and it's pretty freaking slick. You can translate Java code into Objective C that compiles and runs pretty flawlessly on an iOS device, and it's fast. It's not emulated, it's actually a port of Android's core libs right into Objective C. Amazing work.

I started working with Swift recently and it's pretty cool itself. Apple's answer to C# and Java, basically. I like that they released it free and open source for Linux. It's weird to program in until you get used to the weird memory management stuff but, hey, code runs so much faster without garbage collection.

ddriver - Sunday, January 24, 2016 - link

Objective C is an atrocity. Moving away from it in favor of swift is one of the few moves apple can be commended for.

Apple have taken advantage of native code, which has resulted in better user experience than android, even when their hardware was mediocre. Because native code is way better than java.

Relic74 - Saturday, February 27, 2016 - link

Developing apps that take advantage of the iPad Pro's hardware is just the tip of the iceberg. iOS needs a complete overhaul, as in its current state it's lacking just too many features to be considered anything approaching a Pro OS.

The iPad Pro is my first iOS device. I've played with them over the years but I never really liked iOS, it just always felt extremely restrictive to me. When the Pro came out with iOS 9.2 I was intrigued and started to read up on it, the reviews were solid and everyone I talked to who owned one really liked them. So I took the plunge and bought one for myself. Now I already have a tablet, the new Pixel C, which I really like, even though the reviews on that haven't been so super. The biggest complaint was that Android isn't really a productivity OS. I found it to be quite the opposite, it's an extremely capable machine. So when I read that the iPad Pro is pretty decent on productivity tasks, I thought well if they thought the Pixel C wasn't up for the task and it is, then the iPad Pro must be something special.

It's not. Every reason why I avoided iOS all of these years is still present in the latest version, every single one. As I use CodeEnvy, a cloud based IDE, to do most of my programming, I assumed the iPad Pro would be able to handle my work flow. It's nothing outrageous what I'm doing or expect, simply using the CodeEnvy app, Prompt 2 (a terminal app) and Chrome. I also needed Excel to calculate trade PNLs. Within the first hour of using the iPad Pro it was more than apparent that it just wasn't meant for productivity work, or at least nothing on the level that I required, and didn't come close to the Pixel C's abilities.

First, I needed to run the terminal app in the background, compiling apps can take a while, plus I run scripts and monitoring applications. However after 3 minutes iOS would terminate its connections. After some research it seems only about 1% of the apps in the App Store can actually run in the background for extended periods of time, mostly GPS and music apps. Then there was the problem with app resolutions, more than 80% of the ones I had installed didn't support the iPad Pro's resolution. So again after some research it seems only about 10% of the apps available actually support its resolution, these unsupported apps also use another keyboard, one that is extremely basic and missing many of the features of the system's default. Now, app developers are working on this problem but the real problem, which is that apps in iOS are resolution independent in the first place, just isn't a good idea. However it seems that there is no other way to do it because of this so called Walled Garden Paradigm.

Apps in iOS are basically islands and in some weird way are even like OS's themselves, they basically have to fend for themselves with little contact to the actual system except through hacks, okay, APIs, but it sure sounds like a hack to me. So every time a new feature is added to iOS, app developers have to manually update their code to support it. Which brings me to the next issue, dual app view, only about 120 apps or so support it, again, we have to wait for the app developers. Now I'm not saying Android is the better option for any of you, it's all about preferences, but when I enabled the dual app view feature in Android 6.0, every app from that moment on supported it. Further, every app I have installed onto my Pixel C supported its resolutions. When I connect a monitor to it, everything is supported, resolution, aspect ratio, I can even change the DPI to make it look more like a proper desktop UI and it supports extending the desktop, not just mirroring. Since the Pixel C has a USB C port, I'm using the same port-replicator I bought for my MacBook 12", which has HDMI, SD Card reader, 2 USB 3, mini USB and Ethernet, connecting a display to the Pixel C couldn't be easier. When I connected my monitor to the iPad Pro it looked like complete crap, black bars, the DPI was so large it looked like a child's toy and it just supported mirroring, which absolutely sucks because you can't have two monitors.

File system, or should I say lack of, because except for iCloud, iOS doesn't have one, it depends on its apps to manage them. This is absolutely ridiculous and frankly Apple should be ashamed of themselves for leaving it this way for the last 8 years. Dealing with files in iOS is a complete nightmare. Every time I grab a file from the cloud I end up creating at least 4 copies of the same files because when you send a file to an app, it sends a copy, leaving the original in the app you're sharing from. So keeping track of which file is the latest version is impossible. Why am I sharing in the first place? On every single mobile device I've ever used, the sharing feature was used to send content to an online source, never was it used as a method to manage files, especially not as the primary method. Also I have yet to see an app that can Share to every compatible app installed, there are always apps missing from the share list. Why? Because unlike Android, which creates its Share lists dynamically on a system level, the app developers for iOS apps have to manually create a Share profile, which means apps can pick and choose which apps they want to support. When you install the DropBox client, every app that can create a file from that time on should be able to Share to it, period. Instead we have to wait for the app developers for everything in iOS. People say that Android is fragmented, fine, but so is iOS, except in its case, it's the apps that are fragmented. Anytime a new feature is added, every app should automatically be able to do it because the system manages it, not the apps.

The keyboard, I first bought the Apple keyboard, however I really didn't like the way it felt to type on, I missed having the function keys and the biggest issue, no backlight, something I simply cannot live without as I type at night in bed a lot. It also doesn't provide any protection so I had to buy the hard case, 200 bucks + for a mediocre keyboard. So I bought the Logitech, a much, much better typing experience, however there is one problem that became hugely apparent while using it. I wanted a mouse, not every time, just when the keyboard was connected. Why? Well, like Tim Cook said about notebooks with touchscreens being a failed idea mostly due to poor ergonomics, the user has to constantly reach up to navigate the UI (gets old real quick), the iPad Pro, ironically, falls under the same category. Foot in mouth next time Tim, I'm sure you didn't realize at the moment that you were also talking about the iPad Pro but it's the same exact thing.

The Pencil, I'm not an artist so I can't really say if it's good or not. The one thing I do know is that I can't use it throughout the system. Something I desperately wanted to do, so in the drawer it went, instead I use a Wacom, has pressure sensitivity, palm rejection and writes great, no lag. In fact I can't tell the difference between the two when using apps like EverNote, Bamboo Paper, OneNote, etc. The iPad Pro is a fingerprint magnet, I just wanted a stylus to navigate the UI with, also without a mouse the Wacom is the closest thing I can get, works a lot better with it than without when using the keyboard, that's for sure. Is there an actual Apple device available without compromises? There is absolutely zero excuse for not allowing the Pencil to function throughout the system. This idea that the iPad Pro is a touch device only completely fell apart the second Apple made the Pencil and keyboard, two accessories that break this touch only paradigm. The only reason why Apple is doing this is to save a little face from all these years of saying the stylus is garbage. This is also why I'm pissed that the Pro doesn't have mouse support. The OS certainly supports it by the way, my brother has an iPad Air 2 which is JailBroken and he installed mouse drivers just fine, works great.

There is potential here, however even with great apps the blatant problems in iOS prohibit it from ever becoming a proper productivity tool. Now I fully realize that there are plenty of people that get by just fine with using the iPad Pro, I'm just not one of them. The Pixel C is a much more capable machine for what I do, I have every app that I need, which by the way are the same exact apps I had installed on the iPad Pro, so I don't get this "no apps for Android tablets" thing, I have over 60 apps, all of them look and perform great. In fact, they actually look better on the Pixel C because they all support its resolution. I have a stylus, the same Wacom I use for the iPad Pro. I have an actual file-manager with all of my files in a single area, organized by folders. I can access my firm's secure NAS drive using Open ID, I have all of my cloud storage, other computers, external HDs and FTP servers mounted as local assets, so when I click on save, the file is saved directly on whichever remote storage I choose. None of that click on Share BS. When I'm editing a file and need to use more than one app, each app uses the same exact file, no creation of multiple files from Sharing, just open, edit, save, go to other app, open, edit, save. I can find any file in less than a minute, I can find every file that contains a person's name inside of the files in less than a minute. I tried to do this in iOS, I just gave up, finding files in iOS is like trying to find Noah's Ark in Turkey. Zipping and sending files in iOS, well, just also sucks, hopefully the files you're sending aren't located in more than 2 apps. I use the Pixel C as a desktop computer as it has mouse support and looks great when connected to a monitor with extending desktop capabilities.

Since I can run Linux desktop applications, and quickly mind you, it actually makes for a decent desktop machine, however I just purchased an Nvidia Shield TV, installed Arch Linux on it and am now using that as my desktop computer. The performance of the Pixel C was so good when running Linux apps that the Shield TV was a no-brainer. Yes, there are GPU drivers for it, in fact my CUDA applications work great on it. I can encode a video file using the GPU to compute faster than most laptops using their CPUs.

Right now I am compiling an app in the background, while downloading a 20GB .rar file to a connected HD, while streaming a movie directly from OneDrive without having to download it first, to my son's TV in his room, I have Gimp running on my monitor as I was editing a picture, (I'm running Arch Linux in a Chroot under Android, to use applications I just start them up through an X-Terminal, works great), while I'm typing this up in Chrome on the Pixel C itself. The iPad Pro doesn't come close to that level of multitasking, running two apps in a split screen view is a nice feature however I would give it up in a heartbeat to be able to run any and all apps in the background.

I'll end it here, the iPad Pro is still just an iPad, it's not a laptop replacement, it's not a productivity machine, it's an iPad. A content consumption device, just with a larger display. Those wanting one, wait, at least until version 2 comes out. There are just too many issues that need to be worked out and most importantly, no apps that really take advantage of it.

boozed - Friday, January 22, 2016 - link

MaxiPad, surely.

definitelyReal - Friday, January 22, 2016 - link

Lol

xerandin - Saturday, January 23, 2016 - link

You win this comments section. Not really worth much, but it's better than the guys in here defending a product that has left most of everyone everywhere completely nonplussed.

Constructor - Saturday, January 23, 2016 - link

Ideologue, much?

I've simply bought it because I wanted to use it, and I do. Every single day. And it is fantastic.

If you're "nonplussed" by it, you're likely asking the wrong questions to begin with.