The Apple iPad Pro Review

by Ryan Smith, Joshua Ho & Brandon Chester on January 22, 2016 8:10 AM EST

SoC Analysis: On x86 vs ARMv8

Before we get to the benchmarks, I want to spend a bit of time talking about the impact of CPU architectures at a moderate level of technical depth. At a high level, there are a number of peripheral issues when it comes to comparing these two SoCs, such as the quality of their fixed-function blocks. But when you look at what consumes the vast majority of the power, it turns out that the CPU is competing with things like the modem/RF front-end and GPU.

x86-64 ISA registers

Probably the easiest place to start when we’re comparing things like Skylake and Twister is the ISA (instruction set architecture). This subject alone is probably worthy of an article, but the short version for those that aren't really familiar with this topic is that an ISA defines how a processor should behave in response to certain instructions, and how these instructions should be encoded. For example, if you were to add two integers together in the EAX and EDX registers, x86-32 dictates that this would be equivalent to 01d0 in hexadecimal. In response to this instruction, the CPU would add whatever value was in the EDX register to the value in the EAX register and leave the result in the EAX register.
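To make the encoding concrete, here is a minimal Python sketch that decodes just this one instruction form. It assumes the standard ModRM layout (two mode bits, three register bits, three r/m bits); the function name and the decision to handle only opcode 0x01 in its register-to-register form are choices made for illustration, not a real disassembler.

```python
# Register numbering used by the ModRM byte for 32-bit operands.
REG32 = ["eax", "ecx", "edx", "ebx", "esp", "ebp", "esi", "edi"]


def decode_add_rm32_r32(code: bytes) -> str:
    """Decode the two-byte register-to-register form of ADD (opcode 0x01)."""
    opcode, modrm = code[0], code[1]
    if opcode != 0x01:
        raise ValueError("only opcode 0x01 (ADD r/m32, r32) is handled")
    mod = modrm >> 6             # top two bits: addressing mode
    reg = (modrm >> 3) & 0b111   # middle three bits: source register
    rm = modrm & 0b111           # low three bits: destination register
    if mod != 0b11:
        raise ValueError("only the register-direct form (mod = 11) is handled")
    # Intel syntax: destination first, then source.
    return f"add {REG32[rm]}, {REG32[reg]}"


print(decode_add_rm32_r32(bytes.fromhex("01d0")))  # add eax, edx
```

The second byte, 0xD0, is 11 010 000 in binary: register-direct mode, EDX as the source, and EAX as the destination that receives the sum.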

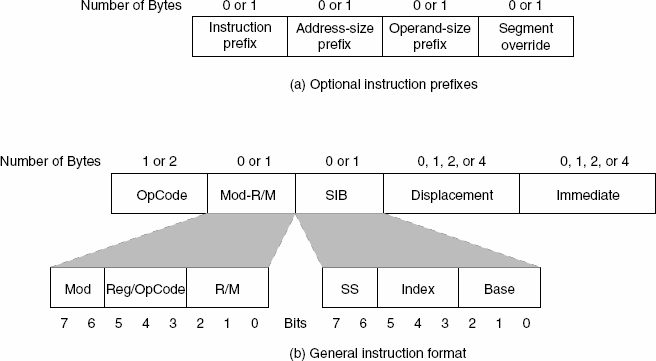

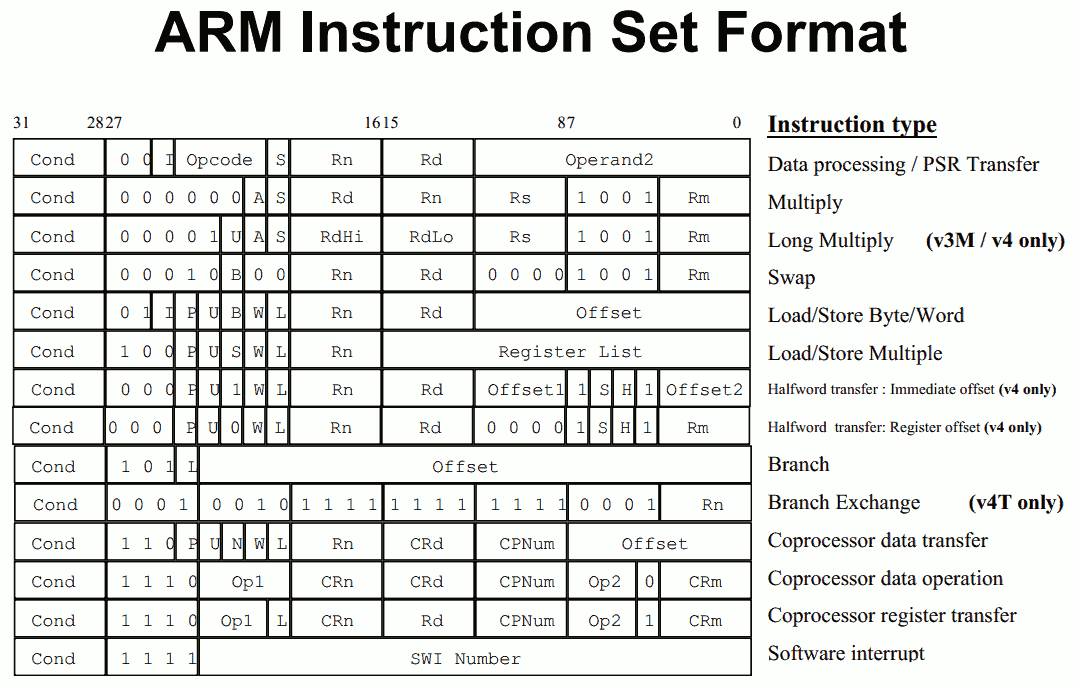

The fundamental difference between x86 and ARM is that x86 is a relatively complex ISA, while ARM is relatively simple by comparison. One key difference is that ARM dictates that every instruction is a fixed number of bits. In the case of ARMv8-A and ARMv7-A, all instructions are 32 bits long unless you're in Thumb mode, in which case all instructions are 16 bits long, but the same sort of trade-offs that come from a fixed-length instruction encoding still apply. Thumb-2 mixes 16-bit and 32-bit instructions, so in some sense it shares the trade-offs of a variable-length ISA. It’s important to make a distinction between instruction and data here, because even though AArch64 uses 32-bit instructions the register width is 64 bits, which is what determines things like how much memory can be addressed and the range of values that a single register can hold. By comparison, Intel’s x86 ISA has variable-length instructions. In both x86-32 and x86-64/AMD64, each instruction can range anywhere from 8 to 120 bits (1 to 15 bytes) long depending upon how the instruction is encoded.

At this point, it might be evident that on the implementation side of things, a decoder for x86 instructions is going to be more complex. For a CPU implementing the ARM ISA, because the instructions are of a fixed length the decoder simply reads instructions 2 or 4 bytes at a time. On the other hand, a CPU implementing the x86 ISA would have to determine how many bytes to pull in at a time for an instruction based upon the preceding bytes.
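The difference in decoder work can be sketched in a few lines of Python. The variable-length format below is a toy invented for illustration (the first byte of each instruction simply states its total length); it is not the x86 encoding, where the length has to be inferred from prefixes, opcode, and addressing bytes. The function names are likewise hypothetical.

```python
def split_fixed(stream: bytes, width: int = 4) -> list:
    """Fixed-length ISA: every boundary is known before any byte is examined."""
    return [stream[i:i + width] for i in range(0, len(stream), width)]


def split_variable(stream: bytes) -> list:
    """Toy variable-length ISA: byte 0 of each instruction holds its length."""
    instructions = []
    pos = 0
    while pos < len(stream):
        length = stream[pos]  # this instruction must be inspected to find the next
        if length == 0 or pos + length > len(stream):
            raise ValueError(f"bad length byte at offset {pos}")
        instructions.append(stream[pos:pos + length])
        pos += length
    return instructions


print(len(split_fixed(bytes(range(8)))))                          # 2
print(len(split_variable(bytes([1, 3, 0xAA, 0xBB, 2, 0xCC]))))    # 3
```

In the fixed-width case every slice could be taken in parallel, since the offsets are 0, 4, 8, and so on. In the variable-width case the start of instruction N depends on having examined instructions 0 through N-1, which is the serial dependency that makes wide x86 decoders harder to build.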

A57 Front-End Decode, Note the lack of uop cache

While it might sound like the x86 ISA is just clearly at a disadvantage here, it’s important to avoid oversimplifying the problem. Although the decoder of an ARM CPU already knows how many bytes it needs to pull in at a time, this inherently means that unless all 2 or 4 bytes of the instruction are used, each instruction contains wasted bits. While it may not seem like a big deal to “waste” a byte here and there, this can actually become a significant bottleneck in how quickly instructions can get from the L1 instruction cache to the front-end instruction decoder of the CPU. The major issue here is that lower code density means a larger instruction cache is needed to hold the same amount of code, and due to RC delay in the metal wire interconnects of a chip, increasing the size of an instruction cache inherently increases the number of cycles that it takes for an instruction to get from the L1 cache to the instruction decoder on the CPU. If a cache doesn’t have the instruction that you need, it could take hundreds of cycles for it to arrive from main memory.

Of course, there are other issues worth considering. For example, in the case of x86, the instructions themselves can be incredibly complex. One of the simplest examples is certain forms of the add instruction, where either the source or the destination can be in memory, although not both. An example of this might be addq (%rax,%rbx,2), %rdx, which could take 5 CPU cycles to happen in something like Skylake. Of course, pipelining and other tricks can make the throughput of such instructions much higher but that's another topic that can't be properly addressed within the scope of this article.

By comparison, the ARM ISA has no direct equivalent to this instruction. Looking at our example of an add instruction, ARM would require a load instruction before the add instruction. This has two notable implications. The first is that this once again is an advantage for an x86 CPU in terms of instruction density because fewer bits are needed to express a single instruction. The second is that for a “pure” CISC CPU you now have a barrier for a number of performance and power optimizations as any instruction dependent upon the result from the current instruction wouldn’t be able to be pipelined or executed in parallel.
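As a rough illustration of the two styles, the Python sketch below models both on a toy machine whose registers and memory are plain dictionaries. The function names, the scratch register `tmp`, and the sample values are all assumptions for illustration; on a real ARM core the address computation may itself need an extra instruction, depending on the addressing mode.

```python
def run_memory_operand_add(regs: dict, mem: dict) -> int:
    """x86 style: one instruction both reads memory and adds,
    in the spirit of addq (%rax,%rbx,2), %rdx."""
    regs["rdx"] += mem[regs["rax"] + regs["rbx"] * 2]
    return 1  # instructions executed


def run_load_store_add(regs: dict, mem: dict) -> int:
    """Load/store style: memory is touched only by an explicit load."""
    regs["tmp"] = mem[regs["rax"] + regs["rbx"] * 2]  # load into a scratch register
    regs["rdx"] += regs["tmp"]                        # register-to-register add
    return 2  # instructions executed


memory = {108: 35}  # one value stored at address 100 + 4 * 2
a = {"rax": 100, "rbx": 4, "rdx": 7}
b = dict(a)
count_a = run_memory_operand_add(a, memory)
count_b = run_load_store_add(b, memory)
print(a["rdx"], count_a)  # 42 1
print(b["rdx"], count_b)  # 42 2
```

Both paths leave the same value in `rdx`; the difference is that the load/store version spends two instructions, and therefore at least two fixed-width instruction words, to get there.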

The final issue here is that x86 just has an enormous number of instructions that have to be supported due to backwards compatibility. Part of the reason why x86 became so dominant in the market was that code compiled for the original Intel 8086 would work with any future x86 CPU, but the original 8086 didn’t even have memory protection. As a result, all x86 CPUs made today still have to start in real mode and support the original 16-bit registers and instructions, in addition to 32-bit and 64-bit registers and instructions. Of course, to run a program in 8086 mode is a non-trivial task, but even in the x86-64 ISA it isn't unusual to see instructions that are identical to the x86-32 equivalent. By comparison, ARMv8 is designed such that a core can only switch between AArch64 code and AArch32 (ARMv7-compatible) code across exception boundaries, so in practice a program is only going to run one type of code or the other.

From the 1980s into the 1990s, this was one of the major reasons why RISC was rapidly becoming dominant, as CISC ISAs like x86 ended up creating CPUs that generally used more power and die area for the same performance. However, today the ISA is basically irrelevant to the discussion due to a number of factors. The first is that beginning with the Intel Pentium Pro and AMD K5, x86 CPUs were really RISC CPU cores with microcode or some other logic to translate x86 CPU instructions to the internal RISC CPU instructions. The second is that decoding of these instructions has been increasingly optimized around only a few instructions that are commonly used by compilers, which makes the x86 ISA practically less complex than what the standard might suggest. The final change here has been that ARM and other RISC ISAs have gotten increasingly complex as well, as it became necessary to enable instructions that support floating point math, SIMD operations, CPU virtualization, and cryptography. As a result, the RISC/CISC distinction is mostly irrelevant when it comes to discussions of power efficiency and performance as microarchitecture is really the main factor at play now.
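The translation step described above can be pictured with a small Python sketch. The tuple format, the `crack` function, and the `tmp0` scratch name are hypothetical; real decoders use proprietary internal formats and differ in how they split or fuse operations.

```python
def crack(instr: tuple) -> list:
    """Translate one toy CISC instruction into RISC-like micro-ops.

    An instruction is (operation, destination, source). A source written
    in square brackets is a memory operand.
    """
    op, dst, src = instr
    if src.startswith("[") and src.endswith("]"):
        # Memory source: emit an explicit load, then a register-only operation.
        return [("load", "tmp0", src[1:-1]), (op, dst, "tmp0")]
    # Register source: the instruction already maps to a single micro-op.
    return [(op, dst, src)]


print(crack(("add", "rdx", "[rax+rbx*2]")))
print(crack(("add", "eax", "edx")))
```

The back end of such a design only ever sees the simple register-to-register and load operations, which is why the complexity of the architectural instruction matters less than it once did.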

408 Comments

xerandin - Saturday, January 23, 2016

In what way did Microsoft saw Surface Pro parts off of other products? You know what's better than that "most stable, secure, and highest quality mobile OS?" For most people, that would be Microsoft Windows--even if they love to complain about it, you can't deny Microsoft's ubiquity in the Professional space (and home userspace, too, but we're trying to keep this in the professional sector, right?)

I've heard a few people at work say that the sysadmins love Macs (it was a Director obsessed with his Macbook telling me this), but I can't seem to find any of these supposed Mac-lovers. It could have something to do with the fact that they're a nightmare to administer for most sysadmins running a domain (which covers the vast majority of sysadmins), or the fact that Mac users tend to be just as inept and incapable as Windows users, so you get to have another pain-in-the-ass group of users to deal with on a system that just isn't very nice to administer.

If you take off your hipster glasses for a moment and actually use computers in the world we live in, there's no way around the reality that Microsoft wins in the Professional space--including their beautifully made, super-powerful Surface Pro.

And no, I'm not a Microsoft shill, I just can't stand Apple fanboys with more money than sense.

gistya - Sunday, January 24, 2016

Xerandin, you have no idea what you are talking about. 90% of the professional software development studios I work with are almost solely Mac based, for the simple reason that they barely need an IT department at all, in that case.

Windows is technical debt, plain and simple. It's legacy cruft stuck to the face of the world. The only IT guys that hate Macs are the idiot ones who don't know bash from tcsh and couldn't sudo themselves out of a wet paper box. Then there are the smart ones who know that a shift to such a low maintenance platform would mean their department would get downsized.

But so many companies are stuck on crap like SAP, Novell NetWare, etc., that Microsoft could literally do nothing right for 10 years and still be a powerhouse. Oh wait.

Apple hardware is worth every extra cent it costs, and then some; if you make such little money that $500 more on a tool that you'll professionally use 8-12 hours a day for three years is a deal-breaker, then I feel very sorry for you.

But personally I think it's more than worth it to have the (by far) best screen, trackpad, keyboard, case, input drivers, and selection of operating systems. I have five different OSes installed right now including three different versions of Windows (the good one, and then the most recent one, and the one that my last job still uses, which does not receive security patches and gets infected with viruses after being on a website for 10 seconds).

As for the iPad Pro, all of you fools just don't understand what it is, or why the pencil is always sold out everywhere, or what the difference is. As a software developer I can tell you that there is the most extreme difference; and that more development for iOS is being done now than ever before, and is being done at an accelerating pace. This is just version 1.0 of the large-size, pro type model for Apple, and those of us who did not buy it yet and who are still waiting for that killer app, are basically saying that well, once that app comes out, then heck yes we'll buy it. Do you seriously believe that no company will rise up to capitalize on that obviously large market? Someone will, and frankly let's hope it's not Adobe.

I regularly see iPad Pros now in the hands of the professional musicians and producers I work with, and they are most certainly using them for professional applications. That's a niche to be sure, but everyone who thinks that the Surface Pro 4 (a mildly crappy laptop with a touchscreen that makes a bad, thick tablet and an underpowered, overheated laptop) is even remotely in the same category of device, is utterly smoking crack.

doggface - Sunday, January 24, 2016

I think it might be you smoking the crack there mate. Cor, what a rant. Microsoft are pretty safe in enterprise and it has everything to do with managing large networks (1000s, not 10s, of computers). Please, direct me to Apple's answer to SCCM, please show me Apple's answer to Exchange. Please show me an Apple only environment running 1000 different apps outside of Google and Apple HQ. Just ain't happening.

Constructor - Sunday, January 24, 2016

IBM (yes, the IBM!) announced a while ago that they will switch over to Macs. And they're neither the first nor the last. Springer (a major German media company) had done that a while ago already for similar reasons (removing unproductive friction and cutting the actual cost of ownership due to less needed user support).

I know that many people had imagined that Windows would be the only platform anyone would ever need to know, but that has always just been an illusion.

damianrobertjones - Monday, January 25, 2016

Link to the article please! They're THINKING of using Apple for mobile use...

Constructor - Monday, January 25, 2016

Nope. They're massively ramping up Mac purchases as well, with a target of 50-75% Macs at IBM. They are already full steam ahead with it:

http://www.i4u.com/2015/08/93776/ibm-purchase-2000...

(Okay: The official announcements don't have that aspect, but an internal video interview with IBM's CIO leaked to YouTube makes it rather explicit even so.)

One of the motivators is apparently that despite higher sticker prices the total cost of ownership is lower for Macs (which is not news any more, but having IBM arriving at that conclusion still says something).

Oxford Guy - Wednesday, January 27, 2016

Gartner said in 1999 that Macs were substantially cheaper in TCO.

mcrispin - Tuesday, January 26, 2016

doggface, you speak with confidence where that confidence isn't deserved. I've managed deployments of OS X way over 10k, there are plenty of places with deployments this high. JAMF Casper Suite is the "SCCM of Apple", I don't need an "Apple" replacement for Exchange, O365 and Google are just fine for email. There are plenty of non-Apple/Google Apps for OS X and iOS. You are seriously misinformed about the reality of the OS X marketplace. Shame that.

Ratman6161 - Wednesday, January 27, 2016

mcrispin...sure you can find individual shops that have done big Mac deployments, but it's anecdotal evidence. It's like looking at one neighborhood in my city and from that concluding that trailer parks are the norm in my city of 15,000.

Take a look at this Oct 2015 article on Mac market share (I'm assuming you don't consider Apple Insider to be a bunch of Microsoft shills?) http://appleinsider.com/articles/15/10/08/mac-gain...

While touting how Mac is gaining market share they show a chart where in Q3 2015 they were at 7.6%. The chart is by company and even smaller Windows PC vendors Asus and Acer are at 7.1 and 7.4 respectively. Throw in Lenovo, HP and Dell at 20.3%, 18.5%, 13.8% and the 25.3% "others" (and others are not Macs because Apple is the only company with those).

So IBM is doing 50 - 75% Macs? OK, I'll take your word for that but so what? In the larger scheme of things Apple still has only 7.6% and selling some computers to IBM isn't going to significantly change that number. Also, don't forget that some companies (that compete with Microsoft in various areas) will not use a Microsoft product no matter how good it is.

No matter how you look at it, Windows is the mainstream OS for businesses worldwide. Touting the exceptions to that doesn't change the truth of it.

Constructor - Wednesday, January 27, 2016

Of course most workplace computers now are PCs. The thing is just that Macs are making major inroads there as well.

$25 billion in Apple's corporate sales are already very far removed from your theory (and that's even without all the smaller shops who are buying retail!).