Intel Xeon E5 Version 3: Up to 18 Haswell EP Cores

by Johan De Gelas on September 8, 2014 12:30 PM EST

Java Server Performance

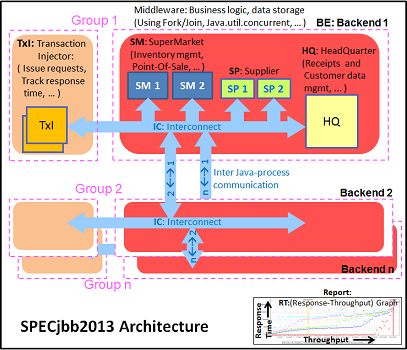

According to the documentation, the SPECjbb 2013 benchmark has "a usage model based on a world-wide supermarket company with an IT infrastructure that handles a mix of point-of-sale requests, online purchases, and data-mining operations". It uses the latest Java 7 features and makes use of XML, compressed communication, and messaging with security. We tested with four groups of transaction injectors and backends.

Several readers commented that we should try to optimize for lower response times instead of only for maximum throughput, so we have changed our relatively basic tuning. We left out "-XX:+AggressiveOpts", as it is still somewhat of a stability risk and does not increase performance tangibly, and we used "-XX:+AlwaysPreTouch". We are also more generous with the amount of allocated memory. These results are thus no longer comparable to our previous results. Our full parameters are:

"-server -Xmx8G -Xms8G -Xmn4G -XX:+AlwaysPreTouch -XX:+UseLargePages"

With these settings, the benchmark takes about 47GB-52GB of RAM. The first metric is basically maximum throughput.
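As a reading aid for the parameters above, here is a minimal Python sketch that decodes the flag string into a per-JVM heap layout. The helper names (`parse_heap_flags`, `describe`) are made up for illustration and are not part of SPECjbb or the JVM. The sketch assumes the standard HotSpot meanings: `-Xmx` is the maximum heap, `-Xms` the initial heap, `-Xmn` the young generation size, and the old generation is whatever remains of the heap.

```python
import re

# The flag string quoted in the article.
JVM_FLAGS = "-server -Xmx8G -Xms8G -Xmn4G -XX:+AlwaysPreTouch -XX:+UseLargePages"

_UNITS = {"K": 2**10, "M": 2**20, "G": 2**30}


def parse_heap_flags(flags: str) -> dict:
    """Return heap sizes in bytes and boolean -XX switches from a flag string."""
    sizes = {}
    switches = {}
    for token in flags.split():
        size = re.fullmatch(r"-X(mx|ms|mn)(\d+)([KMG])", token, re.IGNORECASE)
        if size:
            name, number, unit = size.groups()
            sizes[name.lower()] = int(number) * _UNITS[unit.upper()]
            continue
        switch = re.fullmatch(r"-XX:([+-])(\w+)", token)
        if switch:
            sign, name = switch.groups()
            switches[name] = (sign == "+")
        # Other tokens (such as -server) are ignored in this sketch.
    return {"sizes": sizes, "switches": switches}


def describe(flags: str) -> dict:
    """Derive the per-JVM heap layout in GiB (old gen = max heap - young gen)."""
    parsed = parse_heap_flags(flags)
    gib = 2**30
    sizes = parsed["sizes"]
    return {
        "max_heap_gib": sizes["mx"] / gib,
        "initial_heap_gib": sizes["ms"] / gib,
        "young_gen_gib": sizes["mn"] / gib,
        "old_gen_gib": (sizes["mx"] - sizes["mn"]) / gib,
        "heap_fixed": sizes["mx"] == sizes["ms"],
        "switches": parsed["switches"],
    }


if __name__ == "__main__":
    for key, value in describe(JVM_FLAGS).items():
        print(f"{key}: {value}")
```

Setting `-Xms` equal to `-Xmx` fixes the heap size up front, and `-XX:+AlwaysPreTouch` makes the JVM touch every heap page at startup, so memory is committed before the measurement begins instead of during it.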

Our new tuning has resulted in higher scores, and all of the new Xeons scale well. However, if you look at it from a performance/watt perspective, the results are good but not spectacular. The power consumption of the Xeon E5-2695 v3 is similar to that of the Xeon E5-2697 v2, and the former has a 13% performance advantage.
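The performance/watt point is simple arithmetic: with roughly equal power draw, a 13% throughput advantage translates into roughly a 13% performance/watt advantage. The sketch below uses normalized ratios only. The 1.13 throughput ratio comes from the text, while the power ratios of 0.95 and 1.05 are hypothetical values added to show how sensitive the conclusion is to "similar" power; they are not measurements.

```python
def perf_per_watt_gain(throughput_ratio: float, power_ratio: float) -> float:
    """Relative perf/watt of the new chip versus the old one (1.0 = no change)."""
    if power_ratio <= 0:
        raise ValueError("power_ratio must be positive")
    return throughput_ratio / power_ratio


# From the article: 13% more throughput (E5-2695 v3 vs E5-2697 v2).
THROUGHPUT_RATIO = 1.13

# Hypothetical power ratios around "similar power" (1.00), for illustration only.
for power_ratio in (0.95, 1.00, 1.05):
    gain = perf_per_watt_gain(THROUGHPUT_RATIO, power_ratio)
    print(f"power x{power_ratio:.2f} -> perf/watt {(gain - 1) * 100:+.1f}%")
```

A few percent of difference in measured power moves the performance/watt gain by a similar number of points, which is why the result is described as good but not spectacular.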

The Critical-jOPS metric is a throughput metric under a response time constraint (SLA).

With our new tuning, the Critical-jOPS results make a lot more sense, so we believe we have taken a step forward. Notice that the Xeon E5-2695 v3, despite its clock speed disadvantage (2.3GHz at the least, 2.8GHz at the most), is capable of keeping up with the Xeon E5-2697 v2 (2.7GHz at the least, 3GHz at the most). The improvements in Haswell are measurable.

However, it must be said that while this is a step forward if you're buying a server, it's not a large one. You get 13% more throughput and the same response time for a few hundred dollars less (Xeon E5-2695 v3 vs E5-2697 v2).

85 Comments

LostAlone - Saturday, September 20, 2014 - link

Given the difference in size between the two companies it's not really all that surprising though. Intel are ten times AMD's size, and I have to imagine that Intel's chip R&D department budget alone is bigger than the whole of AMD. And that is sad really, because I'm sure most of us were learning our computer science when AMD were setting the world on fire, so it's tough to see our young loves go off the rails. But Intel have the money to spend, and can pursue so many more potential avenues for improvement than AMD and that's what makes the difference.

Kevin G - Monday, September 8, 2014 - link

I'm actually surprised they released the 18 core chip for the EP line. In the Ivy Bridge generation, it was the 15 core EX die that was harvested for the 12 core models. I was expecting the same thing here with the 14 core models, though more to do with power binning than raw yields.

I guess with the recent TSX errata, Intel is just dumping all of the existing EX dies into the EP socket. That is a good means of clearing inventory of a notably buggy chip. When Haswell-EX formally launches, it'll be of a stepping with the TSX bug resolved.

SanX - Monday, September 8, 2014 - link

You have teased us with the claim that added FMA instructions have double floating point performance. Wow! Is this still possible to do that with FP which are already close to the limit approaching just one clock cycle? This was good review of integer related performance but please combine with Ian to continue with the FP one.

JohanAnandtech - Monday, September 8, 2014 - link

Ian is working on his workstation oriented review of the latest Xeon

Kevin G - Monday, September 8, 2014 - link

FMA is commonplace in many RISC architectures. The reason why we're just seeing it now on x86 is that until recently, the ISA only permitted two registers per operand.

Improvements in this area may be coming down the line even for legacy code. Intel's micro-op fusion has the potential to take an ordinary multiply and add and fuse them into one FMA operation internally. This type of optimization is something I'd like to see in a future architecture (Sky Lake?).

valarauca - Monday, September 8, 2014 - link

The Intel compiler suite I believe already converts

x *= y;

x += z;

into an FMA operation when confronted with them.
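A note on why this conversion is more than a speed-up: a fused multiply-add rounds once, while a separate multiply and add round twice, so the two forms can give different results. The Python sketch below illustrates that. It is not how the Intel compiler or the hardware implements FMA; `fused_mul_add` is a hypothetical helper that emulates the single rounding with exact rational arithmetic, and it assumes finite inputs.

```python
from fractions import Fraction


def mul_then_add(x: float, y: float, z: float) -> float:
    """Two roundings: x * y is rounded to a double, then the sum is rounded again."""
    product = x * y
    return product + z


def fused_mul_add(x: float, y: float, z: float) -> float:
    """One rounding: evaluate x * y + z exactly, then round once to a double.

    Emulated with exact rationals (finite inputs only); a hardware FMA
    produces the same single-rounded result in one instruction.
    """
    exact = Fraction(x) * Fraction(y) + Fraction(z)
    return float(exact)


# Inputs chosen so that the exact product, 1 - 2**-60, is not representable
# as a double and rounds to 1.0 when the multiply is done on its own.
x = 1.0 + 2.0**-30
y = 1.0 - 2.0**-30
z = -1.0

print("separate:", mul_then_add(x, y, z))
print("fused:   ", fused_mul_add(x, y, z))
```

With these inputs the separate version loses the low-order bits of the product before the add, and the fused version keeps them. That difference is why the two forms are not bit-for-bit interchangeable.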

Kevin G - Monday, September 8, 2014 - link

That's with source that is going to be compiled. (And don't get me wrong, that's what a compiler should do!)

Micro-op fusion works on existing binaries years old so there is no recompile necessary. However, micro-op fusion may not work in all situations depending on the actual instruction stream. (Hypothetically the fusion of a multiply and an add in an instruction stream may have to be adjacent to work but an ancient compiler could have slipped in some other instructions in between them to hide execution latencies as an optimization so it'd never work in that binary.)

DIYEyal - Monday, September 8, 2014 - link

Very interesting read.

And I think I found a typo: page 5 (power optimization). It is well known that THE (not needed) Haswell HAS (is/ has been) optimized for low idle power.

vLsL2VnDmWjoTByaVLxb - Monday, September 8, 2014 - link

Colors or labeling for your HPC Power Consumption graph don't seem right.

JohanAnandtech - Monday, September 8, 2014 - link

Fixed, thanks for pointing it out.